A Notebook is all I want or Don't

Making the Case for an Interactive Orchestration Model to Construct Production-Grade Data Assets.

The tweet received strong reactions on LinkedIn and Twitter. To clarify, I quoted it as a Notebook-style development, but it is not exactly a Notebook. There is a lot of context missing in that tweet, so I decided to write a blog about it.

People have reservations about using tools like Jupyter Notebook for production pipelines, and for good reason. Let’s look at a few common criticisms of running Notebooks in production.

Why Not Notebook in Prod?

Lack of Version Control

Version control is one of the common themes for the case against Notebook. However, modern Notebooks like Databricks seamlessly integrate with Git to build pull requests and code review processes. It is still not good enough, but it is a good start in the right direction.

Code Reusability

Notebook code is visible only within the Notebook's own scope. Dependency management is also bound to the scope of the individual Notebook, which gets in the way of production-grade code quality. It is less of an issue with Jupyter Notebook, which allows importing functions from other Notebooks using the run command. Databricks notebooks have similar functionality, termed shared notebooks, to support this feature. However, it forces us to rethink the shared lib model as a shared Notebook model, which can be challenging for overall system design.

Code Execution Flow

The code execution flow in typical Python programming differs from the Notebook's cell-driven execution model. A Python program has a deterministic entry point to its execution flow, whereas in a Notebook one can execute any cell in any order. Cell execution is most often state-driven and can produce non-deterministic results when cells are executed out of order.
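A minimal sketch of the problem, assuming a hypothetical three-cell Notebook modeled as plain Python functions that share one namespace dictionary. The cell names and values are illustrative and not taken from any real Notebook:

```python
# Each "cell" reads and writes a shared namespace, the way Notebook cells
# share kernel state. All names and values here are hypothetical.

def cell_1(ns):
    ns["rows"] = [10, 20, 30]

def cell_2(ns):
    # Mutates the state that cell_1 created.
    ns["rows"] = [r * 2 for r in ns["rows"]]

def cell_3(ns):
    ns["total"] = sum(ns["rows"])

def run(cells):
    """Execute cells in the given order against a fresh namespace."""
    ns = {}
    for cell in cells:
        cell(ns)
    return ns["total"]

in_order = run([cell_1, cell_2, cell_3])              # top to bottom, like a script
rerun_cell_2 = run([cell_1, cell_2, cell_2, cell_3])  # cell 2 executed twice
skipped_cell_2 = run([cell_1, cell_3])                # cell 2 never executed

print(in_order, rerun_cell_2, skipped_cell_2)  # 120 240 60
```

The same three cells give three different totals depending only on which cells ran and how often, which is the kind of hidden state a script with a single entry point avoids.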

Lack of Unit Test Semantics

Notebook design is tuned toward ad-hoc, non-standard exploration. There are no built-in semantics for unit testing the code or for testing the data. This creates a lack of trust in executing Notebooks in production.
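For contrast, here is a rough sketch of what those semantics look like once the logic lives in a plain Python function. It uses the standard library's unittest module; the dedupe_orders transformation, the check_amounts_non_negative data test, and the sample records are hypothetical examples of mine:

```python
import unittest

def dedupe_orders(orders):
    """Keep the first record seen for each order_id (hypothetical transformation)."""
    seen = set()
    result = []
    for order in orders:
        if order["order_id"] not in seen:
            seen.add(order["order_id"])
            result.append(order)
    return result

def check_amounts_non_negative(orders):
    """A data test: validates the data itself rather than the code."""
    return all(order["amount"] >= 0 for order in orders)

class DedupeOrdersTest(unittest.TestCase):
    def test_removes_duplicate_ids(self):
        orders = [
            {"order_id": 1, "amount": 10.0},
            {"order_id": 1, "amount": 10.0},
            {"order_id": 2, "amount": 5.0},
        ]
        self.assertEqual([o["order_id"] for o in dedupe_orders(orders)], [1, 2])

    def test_empty_input(self):
        self.assertEqual(dedupe_orders([]), [])

# Run the suite explicitly so the example works in a script or a Notebook cell.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(DedupeOrdersTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

A CI job can run the same tests on every pull request, which is the part a Notebook does not give you out of the box.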

Lack of DataOps Support

Data orchestration engines like Airflow are built on the underlying Python programming language semantics. Enough tooling and ecosystems are available to build a CI/CD process and an environment-specific build and deploy model. Notebooks don’t have such semantics built in, and it is always a challenge when someone can directly modify a production Notebook without any review process.

Where Notebook Shines?

Notebook has many advantages, but I want to highlight the top 3 from my perspective.

Interactive Development

Building a data asset is an interactive process. We iteratively understand the data's semantics, structure, and distribution as we build the data asset. Notebook-style development is a perfect way to construct data assets.

Built-In Visualization Support

All Notebooks have built-in visualization support, which helps users understand the distribution of the data. We can generate plots and charts directly in the Notebook, making it easier to interpret the data and explain it to non-technical stakeholders.

Documentation support

Notebooks support intuitive code documentation, which allows developers to include explanations, thought processes, analysis conclusions, and project goals. Many orchestration engines try to support Markdown for documentation, yet it is less intuitive than in a Notebook.

A Growing Gap Between Development & Production

Developer productivity is vital to a data team's function in building and growing a data-driven culture. It is hard to build a data-driven culture across the organization if it takes months instead of hours to build data assets. The current mode of data pipeline development requires expert data engineers, whereas citizen developers largely use Notebook-style development. It is common to see data engineers rewrite Notebooks from citizen developers to bring them up to production grade.

How are we trying to solve this problem?

Do you think Notebook is bad for production? How are we trying to bridge the development and production gap? Let’s take a couple of ways the data tools are trying to solve the problem.

YAML

Every data orchestration engine eventually falls in love with YAML configuration-driven orchestration. Airflow successfully fought against Oozie’s XML DSL, but the pattern never dies. Dagster seems on track to bring that dream into orchestration engines.

It is pretty common to see platform engineers think they can build a simple config-driven orchestration DSL that abstracts away all the complexity. As adoption grows, the complexity grows exponentially, and the DSL eventually becomes a programming language of its own that is hard to learn and adopt.
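To make that drift concrete, here is a toy sketch of a config-driven pipeline interpreter. The dictionary stands in for a parsed YAML file; the step types, the keys (type, op, when), and the run_pipeline function are all hypothetical and do not come from any real orchestration engine:

```python
# Comparison operators the config is allowed to name. Every new operator
# or function users ask for has to be added to the interpreter.
OPS = {">": lambda a, b: a > b, "==": lambda a, b: a == b}

def run_pipeline(config, rows, env):
    """Interpret a list of declarative steps against in-memory rows."""
    for step in config["steps"]:
        # "Version 2" of the DSL: conditional steps. The config now carries
        # its own mini expression syntax that the interpreter must evaluate.
        cond = step.get("when")
        if cond and not OPS[cond["op"]](env[cond["var"]], cond["value"]):
            continue
        if step["type"] == "filter":
            rows = [r for r in rows if OPS[step["op"]](r[step["column"]], step["value"])]
        elif step["type"] == "select":
            rows = [{c: r[c] for c in step["columns"]} for r in rows]
        else:
            raise ValueError(f"unknown step type: {step['type']}")
    return rows

config = {
    "steps": [
        {"type": "filter", "column": "amount", "op": ">", "value": 100},
        {"type": "select", "columns": ["id"],
         "when": {"var": "env", "op": "==", "value": "prod"}},
    ]
}

rows = [{"id": 1, "amount": 50}, {"id": 2, "amount": 150}]
prod_rows = run_pipeline(config, rows, {"env": "prod"})
dev_rows = run_pipeline(config, rows, {"env": "dev"})
print(prod_rows)  # [{'id': 2}]
print(dev_rows)   # [{'id': 2, 'amount': 150}]
```

With only two step types and one conditional, the config already has operators, variables, and branching. Loops, joins, and user-defined functions are the usual next requests, and each one moves the DSL closer to a programming language without the tooling of one.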

Is YAML the right approach to commoditize authoring data assets? I don’t think so.

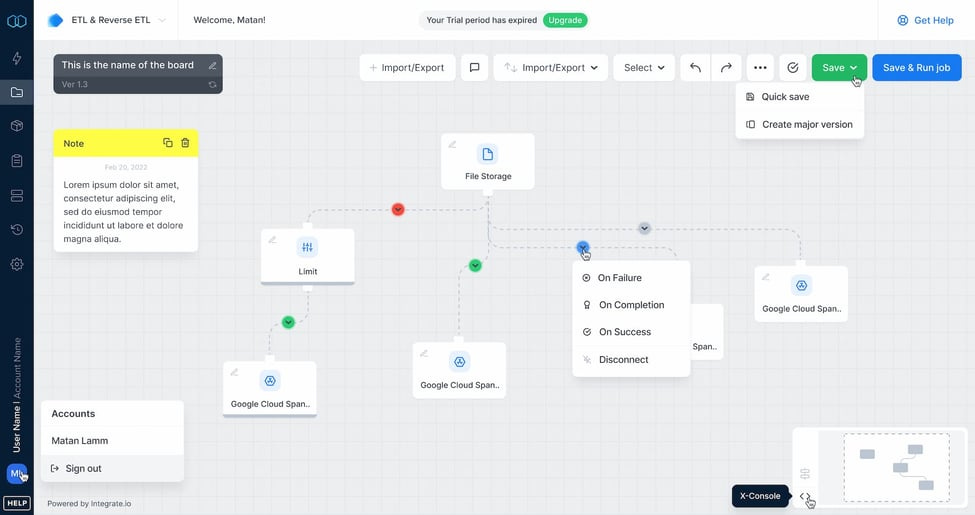

Drag & Drop Low Code UI

Both YAML and general-purpose programming languages require some level of coding proficiency. Here come the no-code, UI-driven data orchestration engines. All the legacy ETL tools offer UI-driven ETL solutions, but they lack version control, a review process, and other software development methodologies.

Is the UI-driven model the right approach to commoditize authoring data assets? I don’t think so.

Why am I rooting for a Notebook-style orchestration?

I believe both config-driven and UI-driven data orchestration are suboptimal. We have seen a significant increase in developer productivity using copilot-style development. McKinsey even called out Gen AI talent as “your next flight risk.”

The common pattern in Notebook and copilot-driven development is that they are interactive development models equipped to build data assets faster. No-code and YAML are unsuitable for Copilot-driven development since the underlying implementation limits them, whereas general-purpose programming brings much more flexibility.

Final Thoughts

As of today, Notebooks are not an optimal solution for writing and operating production data pipelines. I completely agree with that statement. However, compared with the industry's approaches to solving this problem with YAML and no-code UI, I wonder why we are not innovating more on Notebook-style development.

A Notebook-style interactive development model that successfully addresses the existing flaws, combined with the Copilot model, will significantly impact the data engineering landscape.

All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employer opinions.
