Data Engineering Weekly #163

The Weekly Data Engineering Newsletter

Stephanie Kirmer: Uncovering the EU AI Act

Large language models have taken the world by storm, and every country is trying to evaluate their potential impact. India recently announced that all AI apps would require government approval, then later dropped the plan.

In a similar vein, the article walks through the complex EU AI Act, recently passed by the European Parliament, which introduces comprehensive regulations for machine learning models affecting EU citizens, with a focus on mitigating risks to health, safety, and rights. It defines AI broadly, including any machine-based system influencing physical or virtual environments, and categorizes AI applications by risk, from banned "Unacceptable Risk AI Systems" to scrutinized "High-Risk AI Systems." Compliance is mandatory, with strict penalties for violations, so data scientists need to familiarize themselves with the law to avoid prohibited AI uses and ensure ethical, safe AI development.

https://towardsdatascience.com/uncovering-the-eu-ai-act-22b10f946174

Jarkko Moilanen: Exploring the Frontier of Data Products - Seeking Insights on Emerging Standards

Data products are becoming a central part of data pipeline strategy, bringing a new approach to building data as an asset. The author explores the evolving landscape of data product standards, highlighting the need for unified standards to ensure interoperability and manage the data economy effectively. The article discusses the significance of data governance, sharing history, and generative AI's impact on data economy standards. It also introduces emerging standards like the Open Data Contract Standard and Data Product Descriptor Specification.

As you know, I’m fascinated by data products and their potential to change data engineering practice. Watch aldefi.io for more updates.

Mikkel Dengsøe: The cost of data incidents

Mikkel is one of my favorite authors in the data engineering space and always brings a unique perspective. Can we measure the cost of data incidents? Mikkel developed a cost function to measure incidents and classify their severity.

Data quality cost = (avg. $ cost of incident x # of incidents) + (% of time spent x FTE cost)
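As a rough illustration, the formula above can be turned into a few lines of Python. The function name and every input figure below are hypothetical, chosen only to show the arithmetic; they do not come from Mikkel's article.

```python
def data_quality_cost(avg_incident_cost, num_incidents, pct_time_spent, fte_cost):
    """Data quality cost = (avg. $ cost of incident x # of incidents)
    + (% of time spent x FTE cost)."""
    incident_cost = avg_incident_cost * num_incidents  # direct cost of incidents
    people_cost = pct_time_spent * fte_cost            # team time spent on data quality
    return incident_cost + people_cost


# Hypothetical inputs: 12 incidents at $5,000 each, and a team costing
# $600,000 per year that spends 25% of its time on data quality issues.
total = data_quality_cost(
    avg_incident_cost=5_000,
    num_incidents=12,
    pct_time_spent=0.25,
    fte_cost=600_000,
)
print(f"Data quality cost: ${total:,.0f}")
```

With these made-up numbers, the people cost (150,000) outweighs the direct incident cost (60,000), which is the kind of comparison the formula is meant to surface.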

Are you measuring the cost of your data incidents? Please share your thoughts in the comments.

https://medium.com/@mikldd/the-cost-of-data-incidents-53646b588601

Eric Sandosham: The Problem with Data Governance

Data governance is probably one of the most vaguely defined terms in data, and that vagueness has contributed to its irrelevance in the modern data era. Ha, we can’t use "modern data stack" anymore 😭😭😭😭. The author makes a valid argument about the problems with data governance:

- Vague Definitions and Overreach

- Misplaced Focus on Policy and Compliance

- Inadequate Understanding of Data Quality and Representation

I agree with these comments; we need to better define data governance in alignment with the emerging AI standards.

https://eric-sandosham.medium.com/the-problem-with-data-governance-2570f0573f3a

Sponsored: Complimentary Guide: Modern Embedded Analytics

Employing Tailored Embedded Business Insights Through Semantic Layers

Donald Farmer, an expert in data analytics and the author of the O’Reilly book "Embedded Analytics," guides readers through a comprehensive 14-page report focused on using a semantic layer in embedded analytics. Within this report, he explores:

- The challenge of low adoption rates of BI tools among users, even with the industry's overall success.

- The potential of embedded analytics to enhance insights and efficiency across various contexts.

- The advantages and drawbacks of different approaches to embedding analytics.

- The role of AI in shaping data experiences and the crucial role a semantic layer plays in ensuring accuracy in LLMs.

https://cube.dev/embedded-analytics-guide

Netflix: Supporting Diverse ML Systems at Netflix

Netflix writes about utilizing Metaflow, an open-source framework, to support a broad spectrum of ML and AI applications, streamlining the transition from prototype to production. Metaflow's integration with Netflix's data, compute, and orchestration platforms enables scalable and efficient deployment of diverse ML projects. This ecosystem supports domain-specific needs across Netflix, highlighting the company's commitment to leveraging advanced technology for data science, machine learning, and AI to enhance various business facets, from content recommendation to operational efficiency.

https://netflixtechblog.com/supporting-diverse-ml-systems-at-netflix-2d2e6b6d205d

Meta: Building Meta’s GenAI Infrastructure

The article discusses building Meta's GenAI infrastructure, a system designed to train large AI models like Llama 3 efficiently. It covers hardware, software, storage, and network design choices for high throughput and reliability. Notably, Meta is committed to open source and open computing principles and aims to have 350,000 NVIDIA H100 GPUs by the end of 2024!!!

https://engineering.fb.com/2024/03/12/data-center-engineering/building-metas-genai-infrastructure/

Superlinked: Vector DB Comparison

Vector databases are a new class of databases designed to efficiently store and query high-dimensional vector representations of data, like embeddings from LLMs. As LLMs become more widely adopted, there is a growing need for scalable solutions to store and search through the vast amounts of data ingested and generated by these models. The article compares the vector databases available in the market.
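To make "querying vector representations" concrete, here is a minimal sketch of the core operation: finding the stored vectors most similar to a query vector. It uses an exhaustive scan with cosine similarity over tiny, made-up three-dimensional vectors. Real vector databases typically rely on approximate nearest-neighbor indexes instead of scanning everything, and the names `cosine_similarity`, `top_k`, and the `doc-*` IDs are illustrative assumptions, not any product's API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Score every stored vector against the query and return the k best."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical "embeddings"; real ones have hundreds or thousands of dimensions.
index = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}

results = top_k([1.0, 0.0, 0.0], index, k=2)
for doc_id, score in results:
    print(doc_id, round(score, 4))
```

The exhaustive scan costs time proportional to the number of stored vectors per query, which is why indexing strategy is one of the main dimensions on which vector databases differ.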

https://superlinked.com/vector-db-comparison/

MotherDuck: Differential Storage: A Key Building Block For A DuckDB-Based Data Warehouse

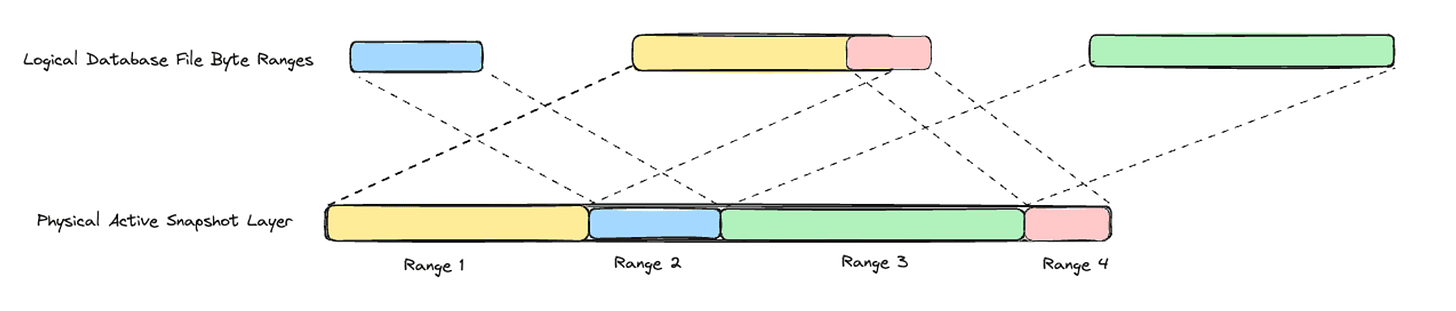

Differential storage is a technique used in databases and file systems to reduce the storage space required by taking advantage of data redundancy. Here's how it works:

When new data is written, only the differences (deltas) from the existing data are calculated and stored instead of storing the entire file or data object.

When the data is read, the original data and the stored deltas are combined to reconstruct the full data.
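The two steps above can be sketched in a few lines of Python. This is a toy, chunk-based illustration of the general idea, not MotherDuck's implementation; the chunk size, function names, and delta layout are all assumptions made for the example.

```python
CHUNK = 4  # bytes per chunk; deliberately tiny for illustration


def split(data: bytes):
    """Cut data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + CHUNK] for i in range(0, len(data), CHUNK)]


def make_delta(old: bytes, new: bytes):
    """Write path: keep only the chunks that differ from the previous version."""
    old_chunks, new_chunks = split(old), split(new)
    changed = {
        i: chunk
        for i, chunk in enumerate(new_chunks)
        if i >= len(old_chunks) or old_chunks[i] != chunk
    }
    return {"num_chunks": len(new_chunks), "changed": changed}


def apply_delta(old: bytes, delta):
    """Overlay one delta on the previous version."""
    chunks = split(old)[:delta["num_chunks"]]            # drop truncated chunks
    chunks += [b""] * (delta["num_chunks"] - len(chunks))  # make room for growth
    for i, chunk in delta["changed"].items():
        chunks[i] = chunk
    return b"".join(chunks)


def reconstruct(base: bytes, deltas):
    """Read path: combine the original data with the stored deltas, in order."""
    data = base
    for delta in deltas:
        data = apply_delta(data, delta)
    return data


base = b"aaaabbbbcccc"
v2 = b"aaaaXXXXcccc"    # one chunk overwritten
v3 = b"aaaaXXXXccccdd"  # data appended

delta1 = make_delta(base, v2)
delta2 = make_delta(v2, v3)
print(delta1["changed"], delta2["changed"])
print(reconstruct(base, [delta1, delta2]) == v3)
```

Only the base and the two small deltas need to be stored to recover all three versions. The trade-off is that reads must replay the deltas, so a production system would also need some way to compact them.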

MotherDuck writes an excellent article about integrating differential storage in DuckDB.

https://motherduck.com/blog/differential-storage-building-block-for-data-warehouse/

Alibaba: Best Practices for Ray Clusters - Ray on ACK

Ray is gaining a lot of traction as Python-based AI workloads increase. Anyscale writes about why leading companies are betting on Ray. The article covers the creation of Ray clusters within ACK for efficient AI and Python application scaling, integrating with various Alibaba Cloud services for improved log management and observability. Additionally, it emphasizes the use of the Ray and ACK autoscalers for dynamic resource scaling, improving computing resource efficiency and utilization for machine learning and other intensive computational workloads.

https://www.alibabacloud.com/blog/best-practices-for-ray-clusters---ray-on-ack_600925

Red Hat: Kafka tiered storage deep dive

Kafka tiered storage is a design that keeps recent data on broker disks and offloads older log segments to remote storage such as S3. The article details the technical architecture and benefits of the tiered storage approach, including better resource utilization and cost savings while maintaining Kafka's performance and scalability.

https://developers.redhat.com/articles/2024/03/13/kafka-tiered-storage-deep-dive

All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions.