Data Engineering Weekly #197

The Weekly Data Engineering Newsletter

Slack: Empowering Engineers with AI

In our most recent internal developer survey, 65% of respondents said they prefer an IDE as the primary platform to engage with generative-AI for development tasks.

Companies are rapidly adopting Gen-AI in their developer workflows and internal knowledge management to improve productivity. Slack provides an excellent overview of this process. I predict Gen-AI will power more specialized IDEs to improve developer productivity.

https://slack.engineering/empowering-engineers-with-ai/

Sebastian Raschka: Understanding Multimodal LLMs

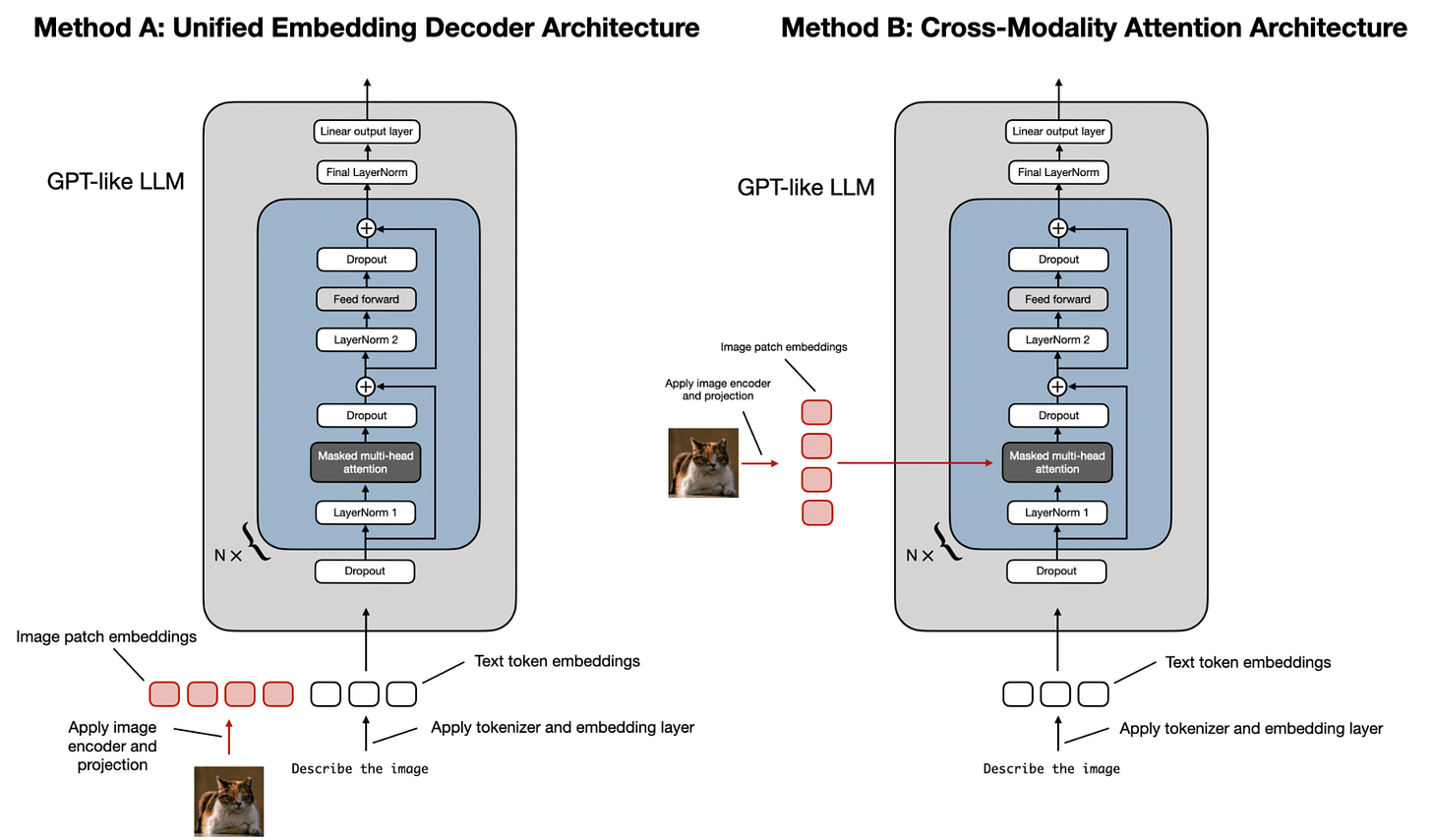

The author writes an excellent overview of multimodal LLMs. The blog outlines two main approaches for building these models: the Unified Embedding Decoder Architecture and the Cross-Modality Attention Architecture. The article then reviews recent research papers on multimodal LLMs, including models like Llama 3.2, Molmo, NVLM, Qwen2-VL, Pixtral, MM1.5, Aria, Baichuan-Omni, Emu3, and Janus, highlighting their approaches and unique characteristics.

https://sebastianraschka.com/blog/2024/understanding-multimodal-llms.html

Hamel: Creating a LLM-as-a-Judge That Drives Business Results

The author writes a comprehensive guide on creating an effective large language model (LLM) as a judge for evaluating AI products, focusing on the process of "Critique Shadowing." The author outlines a seven-step process, starting with identifying a Principal Domain Expert, generating diverse datasets, and having the expert provide detailed critiques alongside pass/fail judgments. The blog highlights the importance of iterating on this process, continuously refining the LLM judge by learning from the expert's insights, and ensuring alignment with business goals.

Creating a LLM-as-a-Judge That Drives Business Results
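To make the iteration loop a little more concrete, here is a minimal sketch of how one might track whether the LLM judge agrees with the domain expert's pass/fail labels. The `LabeledExample` type, its field names, and the agreement metric are my own illustrative assumptions and are not taken from the guide.

```python
from dataclasses import dataclass


@dataclass
class LabeledExample:
    """One evaluated output. All field names here are hypothetical."""
    expert_pass: bool      # pass/fail judgment from the domain expert
    expert_critique: str   # the expert's written critique
    judge_pass: bool       # pass/fail judgment from the LLM judge


def agreement_rate(examples: list[LabeledExample]) -> float:
    """Fraction of examples where the judge matches the expert."""
    if not examples:
        raise ValueError("need at least one labeled example")
    matches = sum(e.expert_pass == e.judge_pass for e in examples)
    return matches / len(examples)


def disagreements(examples: list[LabeledExample]) -> list[LabeledExample]:
    """Examples to review before the next revision of the judge prompt."""
    return [e for e in examples if e.expert_pass != e.judge_pass]


examples = [
    LabeledExample(True, "Accurate and complete.", True),
    LabeledExample(False, "Missed the refund policy.", False),
    LabeledExample(False, "Tone is dismissive.", True),
    LabeledExample(True, "Clear next steps.", True),
]
rate = agreement_rate(examples)
to_review = disagreements(examples)
```

The disagreement list, together with the expert critiques, is the kind of input one would read through before the next round of refinement.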

Event Alert: IMPACT Summit

If you haven't registered for the IMPACT Summit yet, now's the perfect time 🔈

Here’s what we’ve got in store:

- A half-day virtual event created to elevate your 2025 data strategy

- Sessions jam-packed with industry experts sharing how they're driving data and AI adoption

- Practical tips and best practices from Monte Carlo customers

- Opportunities to connect and network with other data professionals

- Giveaways and raffles for attendees, including three All-Access subscriptions to DataExpert.io!

- And more!

What are you waiting for? Register for IMPACT today!

Alireza Sadeghi: The History and Evolution of Open Table Formats

I am always happy to read the history behind a technology's evolution. The blog is an excellent overview of Lakehouse formats, initiated by the Apache Hudi project from Uber, and walks through the evolution of features such as transactional guarantees and the latest unified table format.

https://alirezasadeghi1.medium.com/the-history-and-evolution-of-open-table-formats-0f1b9ea10e1e

Uber: Presto® Express: Speeding up Query Processing with Minimal Resources

Uber possibly runs one of the largest Presto clusters in operation. Uber writes about express queries, defined as any Presto query that can be finished within 2 minutes. To identify express queries, Uber developed a method that uses historical data to predict whether an upcoming query is an express query.

https://www.uber.com/blog/presto-express/
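As a rough illustration of predicting express queries from history, here is a minimal sketch. It assumes queries can be grouped by some fingerprint and that a query is predicted as express when most of its past runs finished within 2 minutes. The class name, the fingerprint idea, and both thresholds are my own assumptions; this is not Uber's actual method.

```python
from collections import defaultdict

EXPRESS_THRESHOLD_SECONDS = 120  # "within 2 minutes", per the blog's definition


class ExpressQueryPredictor:
    """Hypothetical predictor based on past runtimes per query fingerprint."""

    def __init__(self, min_samples: int = 3, min_express_ratio: float = 0.9):
        self.min_samples = min_samples              # assumed: history needed
        self.min_express_ratio = min_express_ratio  # assumed: share of fast runs
        self.history: dict[str, list[float]] = defaultdict(list)

    def record(self, fingerprint: str, runtime_seconds: float) -> None:
        """Store the observed runtime of a finished query."""
        self.history[fingerprint].append(runtime_seconds)

    def is_express(self, fingerprint: str) -> bool:
        """Predict express only when enough past runs were fast."""
        runs = self.history.get(fingerprint, [])
        if len(runs) < self.min_samples:
            return False  # not enough history: treat as a regular query
        fast = sum(r <= EXPRESS_THRESHOLD_SECONDS for r in runs)
        return fast / len(runs) >= self.min_express_ratio


predictor = ExpressQueryPredictor()
for runtime in (12.0, 45.5, 30.0):
    predictor.record("daily_trip_counts", runtime)
for runtime in (20.0, 400.0, 35.0):
    predictor.record("wide_join_report", runtime)
```

Falling back to the regular path when there is little history keeps an unknown, possibly heavy query from being routed to the express path by mistake.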

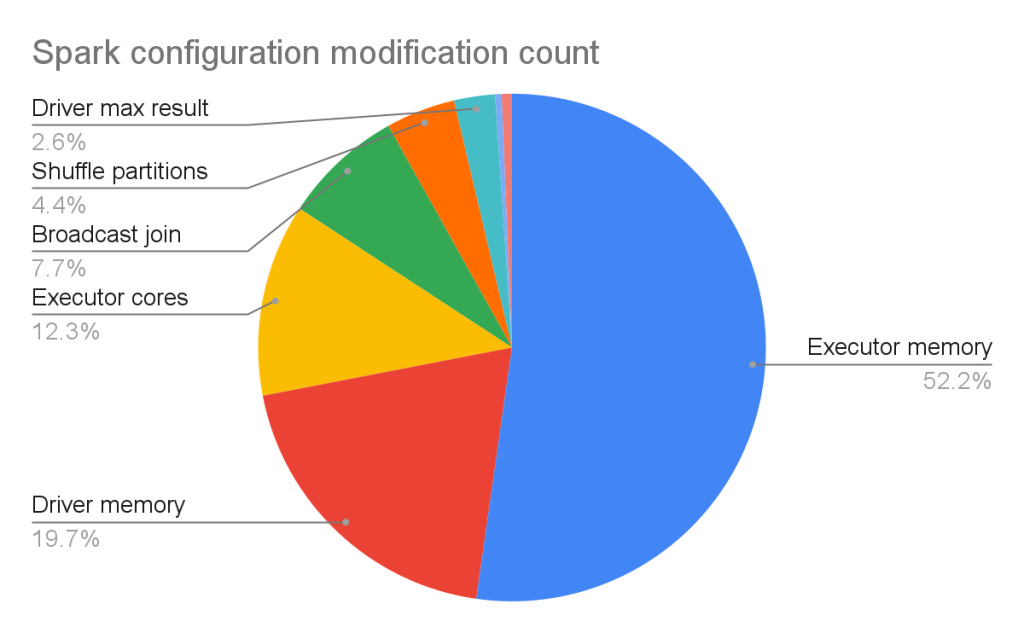

LinkedIn: Right-sizing Spark executor memory

In another optimization story, LinkedIn writes about right-sizing Spark executor memory. TIL about Deadline-aware Preemptive Job Scheduling. The blog narrates how LinkedIn achieved two goals:

- Close the gap between allocated and utilized executor memory.

- Minimize execution failures due to executor OOM errors.

https://www.linkedin.com/blog/engineering/infrastructure/right-sizing-spark-executor-memory
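To picture what "closing the gap" can mean in practice, here is a minimal sketch that sizes executor memory from the peak usage observed in past runs plus some headroom against OOM errors. The function names, the 20% headroom, the 256 MB rounding step, and the 1 GB floor are all illustrative assumptions of mine, not LinkedIn's policy.

```python
def allocation_gap_mb(allocated_mb: int, peak_usage_mb: list[int]) -> int:
    """Memory that was allocated but never used at peak in past runs."""
    return allocated_mb - max(peak_usage_mb)


def recommend_executor_memory_mb(
    peak_usage_mb: list[int],
    headroom_pct: int = 20,   # assumed safety margin against OOM
    step_mb: int = 256,       # assumed rounding granularity
    floor_mb: int = 1024,     # assumed minimum allocation
) -> int:
    """Suggest an allocation: historical peak plus headroom, rounded up."""
    if not peak_usage_mb:
        raise ValueError("need at least one historical peak measurement")
    # Integer ceiling division avoids floating-point rounding surprises.
    target = -(-max(peak_usage_mb) * (100 + headroom_pct) // 100)
    rounded = -(-target // step_mb) * step_mb
    return max(floor_mb, rounded)


history = [3000, 3500, 4000]  # peak executor memory (MB) from past runs
gap = allocation_gap_mb(8192, history)
suggested = recommend_executor_memory_mb(history)
```

The headroom is the knob that trades the two goals against each other: a smaller margin narrows the gap, while a larger one lowers the chance of an executor OOM.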

Atheon Analytics: how we modified dbt’s incremental materialization to more than halve execution time for incremental loads

Incremental data processing in dbt has triggered some interesting conversations. Thanks to the dbt community, the incremental model is getting the attention it requires. In the second part of the blog from Atheon Analytics, the author describes some of the challenges with dbt's incremental materialization and the internal optimization framework that helped to more than halve the execution time.

Harness: From dbt to SQLMesh

The story from Harness is possibly the first migration from dbt to SQLMesh that I have noticed. The author highlights the critical factors that motivated the migration:

- dbt’s Jinja templating and non-deterministic macros

- Elimination of YAML

- Plan-Apply workflow

https://www.harness.io/blog/from-dbt-to-sqlmesh

Astrafy: Dynamic Data Pipelines with Airflow Datasets and Pub/Sub

Airflow is expanding its support for data-aware scheduling, which takes a more prominent role in the upcoming releases. The blog is an excellent overview of building dynamic data pipelines with Airflow Datasets and Pub/Sub.

https://medium.astrafy.io/dynamic-data-pipelines-with-airflow-datasets-and-pub-sub-d91c81d75f51

Yelp: Loading data into Redshift with DBT

It is good to see a blog about Redshift unrelated to migrating.

We primarily used Spark jobs to read S3 data and publish it to our in-house Kafka-based Data Pipeline (which you can read more about here) to get data into both Data Lake and Redshift. 🤔🤔🤔🤔🤔

https://engineeringblog.yelp.com/2024/11/loading-data-into-redshift-with-dbt.html

All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers' opinions.