Data Engineering Weekly #212

The Weekly Data Engineering Newsletter

Annual Report: The State of Apache Airflow® 2025

DataOps on Apache Airflow® is powering the future of business – this report reviews responses from 5,000+ data practitioners to reveal how and what’s coming next.

Editor’s Note: Data Council 2025, Apr 22-24, Oakland, CA

Data Council has always been one of my favorite events to connect with and learn from the data engineering community. Data Council 2025 is set for April 22-24 in Oakland, CA. As a special perk for Data Engineering Weekly subscribers, you can use the code dataeng20 for an exclusive 20% discount on tickets!

https://www.datacouncil.ai/bay-2025

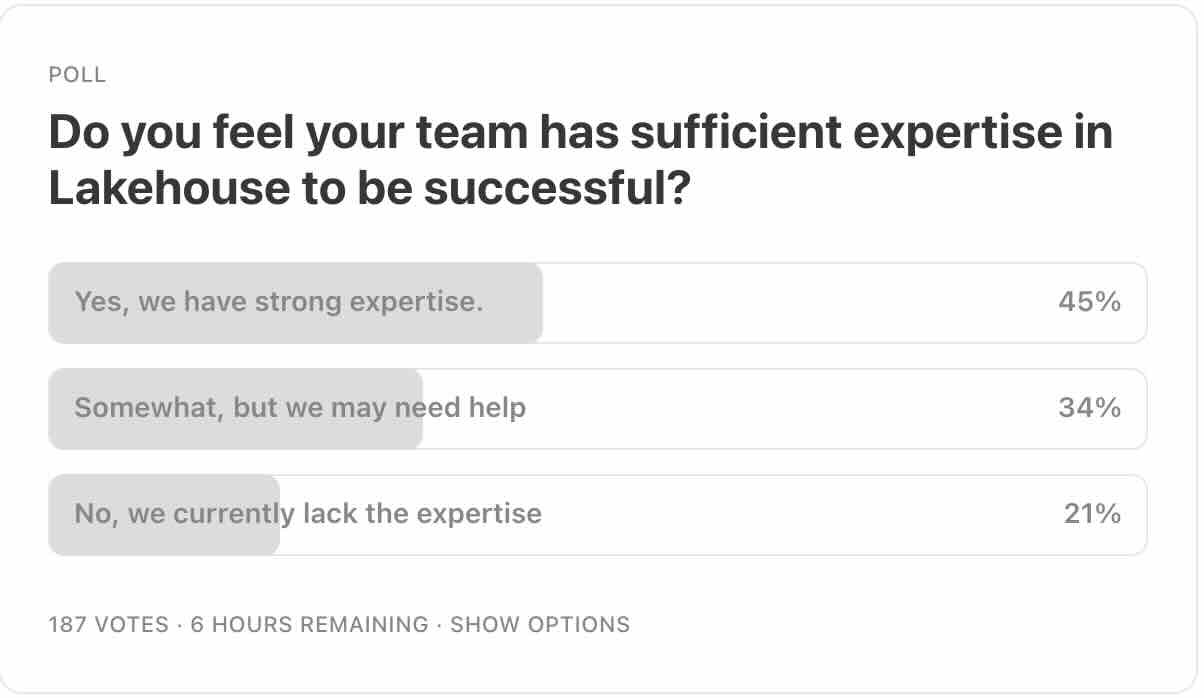

The poll results show that while 45% of respondents feel confident in their Lakehouse expertise, a majority (55%) recognize gaps in their knowledge or need support. Adoption is growing, but there is still a significant need for learning, collaboration, and assistance in using Lakehouse technology effectively. The key takeaway: Lakehouse is gaining traction, but many teams are still in the learning phase.

Shruti Gandhi: The Infrastructure Behind AI's 'App Layer'

The most valuable AI applications are not standalone but integrate deeply with existing workflows and data sources. The author emphasizes funding the underlying infrastructure that AI needs to function effectively. In this view, the true value lies in companies building data pipelines, model adaptation tools, and integration frameworks rather than user interfaces.

https://www.linkedin.com/pulse/infrastructure-behind-ais-app-layer-shruti-gandhi-h1jgc/

Airbnb: Accelerating Large-Scale Test Migration with LLMs

Another LLM productivity success story. Airbnb describes migrating nearly 3,500 React component test files from Enzyme to React Testing Library (RTL) using a combination of large language models (LLMs) and automation. The blog walks through the process: a step-based state machine with automated validation and LLM-driven refactoring, retry loops with dynamic prompting, extensive context for the LLM (including related code, examples, and guidelines), and a "sample, tune, sweep" strategy for iterative improvement. The result was a 97% automated migration in six weeks, significantly faster and more cost-effective than the initially estimated 1.5 years of manual effort.

https://medium.com/airbnb-engineering/accelerating-large-scale-test-migration-with-llms-9565c208023b
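
To make the "step-based state machine with retry loops" idea concrete, here is a minimal Python sketch. It is not Airbnb's implementation: the `Step`, `MigrationResult`, and `migrate_file` names, the validator signature, and the prompt layout are all assumptions I made for illustration, and the LLM call is a plain function you would supply yourself.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical hooks (assumptions, not from the Airbnb post):
# a validator returns (passed, error_message); a refactor function
# takes a prompt and returns the rewritten file content.
Validator = Callable[[str], Tuple[bool, str]]
Refactor = Callable[[str], str]


@dataclass
class Step:
    name: str
    validate: Validator


@dataclass
class MigrationResult:
    content: str
    completed_steps: List[str] = field(default_factory=list)
    failed_step: Optional[str] = None


def migrate_file(content: str, steps: List[Step], refactor: Refactor,
                 max_retries: int = 3) -> MigrationResult:
    """Advance a file through the steps in order; retry a failing step
    with a prompt rebuilt from the latest validation error."""
    result = MigrationResult(content=content)
    for step in steps:
        ok, error = step.validate(result.content)
        attempts = 0
        while not ok and attempts < max_retries:
            # Dynamic prompting: each retry sees the newest error and file.
            prompt = (
                f"Step: {step.name}\n"
                f"Validation error:\n{error}\n\n"
                f"Current file:\n{result.content}"
            )
            result.content = refactor(prompt)
            attempts += 1
            ok, error = step.validate(result.content)
        if not ok:
            # Leave the file at this step for a later, better-tuned pass.
            result.failed_step = step.name
            return result
        result.completed_steps.append(step.name)
    return result
```

Files that stop at `failed_step` are the natural input to a "sample, tune, sweep" style loop: sample the failures, adjust the prompt or the steps, and run the remaining files again.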

Square: RoBERTa Model for Merchant Categorization at Square

Square writes about using a RoBERTa-based machine learning model to improve merchant categorization accuracy, which is crucial for personalized product experiences, business strategy, growth, product eligibility, and accurate interchange fees. The model leverages high-quality training data, the RoBERTa architecture, and post-onboarding signals, and it significantly outperforms previous methods (with a ~30% absolute accuracy improvement). The article describes the data preprocessing, model creation with Databricks and Hugging Face, and inference optimization using multiple GPUs, PySpark, batch size optimization, and incremental predictions. It closes with accuracy improvements across various business categories.

https://developer.squareup.com/blog/roberta-model-for-merchant-categorization-at-square/

The State of Apache Airflow® 2025

We asked 5,000+ data engineers how they use Airflow. What did we learn? Airflow has never been more critical for data operations, or more important to their careers.

Check out the full report for more insights and DataOps trends!

https://www.astronomer.io/airflow/state-of-airflow

Intuit: Revolutionizing Knowledge Discovery with GenAI to Transform Document Management

Intuit writes about using a dual-loop system to build a GenAI-powered pipeline to improve knowledge discovery in its technical documentation. The inner loop enhances document quality and structure through GenAI plugins (analyzer, improver, style guide, discoverability, augmentation). The outer loop improves information retrieval and answer synthesis via embedding, search, and answer plugins. This approach is interesting, and I wonder how data catalogs can use it.

Tecton: Understanding the Feature Freshness Problem in Real-Time ML

For real-time ML applications, the most recent data often holds the most predictive power. Feature freshness, defined as "the time between when new data becomes available and when a model can use it for prediction," is crucial for capturing and acting on these recent signals. Stale or delayed features can lead to missed fraud detection, irrelevant recommendations, and poor decision-making. Tecton presents a case study of how HomeToGo evolved its architecture from batch inference to real-time.

https://www.tecton.ai/blog/understanding-the-feature-freshness-problem-in-real-time-ml/
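
The freshness definition quoted above is simple arithmetic, so a small Python sketch may help. The function names and the idea of a per-feature "freshness budget" are my own assumptions for illustration, not something taken from the Tecton article.

```python
from datetime import datetime, timedelta, timezone


def feature_freshness(data_available_at: datetime,
                      feature_servable_at: datetime) -> timedelta:
    """Time between new data becoming available and the model being
    able to use the derived feature for prediction."""
    if feature_servable_at < data_available_at:
        raise ValueError("a feature cannot be servable before its data exists")
    return feature_servable_at - data_available_at


def is_stale(freshness: timedelta, budget: timedelta) -> bool:
    """Hypothetical check: does the feature exceed its freshness budget?"""
    return freshness > budget


# Example: an event lands at 12:00:00 UTC and the feature is servable at 12:01:30.
event_time = datetime(2025, 3, 1, 12, 0, 0, tzinfo=timezone.utc)
servable_time = datetime(2025, 3, 1, 12, 1, 30, tzinfo=timezone.utc)
freshness = feature_freshness(event_time, servable_time)
stale_for_fraud = is_stale(freshness, budget=timedelta(seconds=5))
stale_for_daily_report = is_stale(freshness, budget=timedelta(hours=24))
```

The same 90-second delay can be unacceptable for a fraud model and irrelevant for a daily report, which is why a freshness target only makes sense per use case.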

AWS: An introduction to preparing your own dataset for LLM training

Everything in AI eventually comes down to the quality and completeness of your internal data. The article provides an introduction to preparing datasets for Large Language Model (LLM) training, covering data preprocessing (extracting text from various formats like HTML, PDF, and Office documents, and filtering low-quality content), deduplication (using techniques like CCNet, MinHash, and Locality Sensitive Hashing), and creating datasets for fine-tuning. It discusses dataset considerations (relevance, annotation quality, size, ethics, data cutoffs, modalities, synthetic data), formats for instruction and preference tuning, synthetic data creation (Self-Instruct), data labeling approaches (human, LLM-assisted, cohort-based, RLHF-based), and data processing architectures using Amazon Web Services.
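
For readers new to the deduplication techniques mentioned above, here is a minimal, standard-library-only sketch of MinHash signatures with Locality Sensitive Hashing (LSH) banding. It is not the AWS article's code: the shingle size, the number of hash functions, the band count, and every function name are assumptions chosen for illustration.

```python
import hashlib
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Set, Tuple


def shingles(text: str, k: int = 5) -> Set[str]:
    """Split text into overlapping k-word shingles."""
    tokens = text.lower().split()
    if len(tokens) < k:
        return {" ".join(tokens)}
    return {" ".join(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def _seeded_hash(seed: int, shingle: str) -> int:
    # One salted 64-bit hash per seed stands in for a random permutation.
    digest = hashlib.blake2b(shingle.encode("utf-8"), digest_size=8,
                             salt=seed.to_bytes(8, "little")).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(shingle_set: Set[str], num_perm: int = 64) -> List[int]:
    """Keep the minimum hash value per seed."""
    return [min(_seeded_hash(seed, s) for s in shingle_set)
            for seed in range(num_perm)]


def estimated_jaccard(sig_a: List[int], sig_b: List[int]) -> float:
    """Fraction of matching positions approximates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def candidate_pairs(signatures: Dict[str, List[int]],
                    bands: int = 16) -> Set[Tuple[str, str]]:
    """LSH banding: documents sharing any identical band become candidates."""
    rows = len(next(iter(signatures.values()))) // bands
    buckets = defaultdict(set)
    for doc_id, sig in signatures.items():
        for band in range(bands):
            key = (band, tuple(sig[band * rows:(band + 1) * rows]))
            buckets[key].add(doc_id)
    pairs = set()
    for doc_ids in buckets.values():
        for a, b in combinations(sorted(doc_ids), 2):
            pairs.add((a, b))
    return pairs


docs = {
    "a": "the quick brown fox jumps over the lazy dog near the river bank",
    "b": "the quick brown fox jumps over the lazy dog near the river bank",
    "c": "quarterly revenue grew while operating costs stayed flat across regions",
}
signatures = {doc_id: minhash_signature(shingles(text)) for doc_id, text in docs.items()}
pairs = candidate_pairs(signatures)
```

In a real pipeline, candidate pairs would be confirmed against a similarity threshold before dropping a document. More bands with fewer rows per band catch looser matches at the cost of more candidates to check.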

Apache Arrow: Data Wants to Be Free: Fast Data Exchange with Apache Arrow

Data exchange is critical when discussing AI and the need for data quality, and Apache Arrow continues to make a big impact here. The blog narrates how Apache Arrow offers better data serialization efficiency and avoids design pitfalls from the past.

https://arrow.apache.org/blog/2025/02/28/data-wants-to-be-free/

Shima Ghassempour: Beyond thumbs up and thumbs down: A human-centered approach to evaluation design for LLM products

Because Generative AI is non-deterministic, a well-designed evaluation process is critical. The blog stresses the need for granular, structured feedback, especially from experts, and outlines key considerations for evaluation design. These include defining clear success metrics, combining automated and human-in-the-loop evaluations, incorporating explicit and implicit feedback, designing relevant feedback mechanisms, utilizing offline and online evaluation, addressing subjectivity and variability, ensuring privacy, and conducting early and frequent user testing.

All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions.