Data Engineering Weekly #233

The Weekly Data Engineering Newsletter

Dagster Running Dagster: Event Driven Pipelines

We had a huge response to our last Dagster Running Dagster session, and we’re bringing it back with a focus on event-driven pipelines and real-time observability. Dagster engineer Nick Roach (with Alex Noonan & Colton Padden) will walk through how we orchestrate millions of daily events with real-time observability using Dagster+.

What you’ll learn:

- Designing reliable streaming workflows

- Integrating Dagster with Kafka & Flink

- Monitoring event-driven pipelines in production

Reserve your spot now.

Event Alert: Atlan Activate - Reimagining Data Catalogs & Governance in the AI Era

👑 In the internet era, content was king. In the AI era, context is king.

AI isn’t working for most enterprises: models use the wrong data, agents misinterpret business logic, and chatbots expose what they shouldn’t. The issue? Missing context about data, policies, quality, sensitivity, and meaning.

On August 21st at 1 PM ET, Atlan will unveil its next chapter at Activate, a virtual launch event showcasing how metadata infrastructure can make AI trustworthy, compliant, and context-aware.

See how Atlan’s latest innovations, from AI Governance and Data Quality Studios to the world’s first Metadata Lakehouse, are redefining how enterprises prepare AI for real-world use cases.

👉 Register here: https://atlan.com/activate

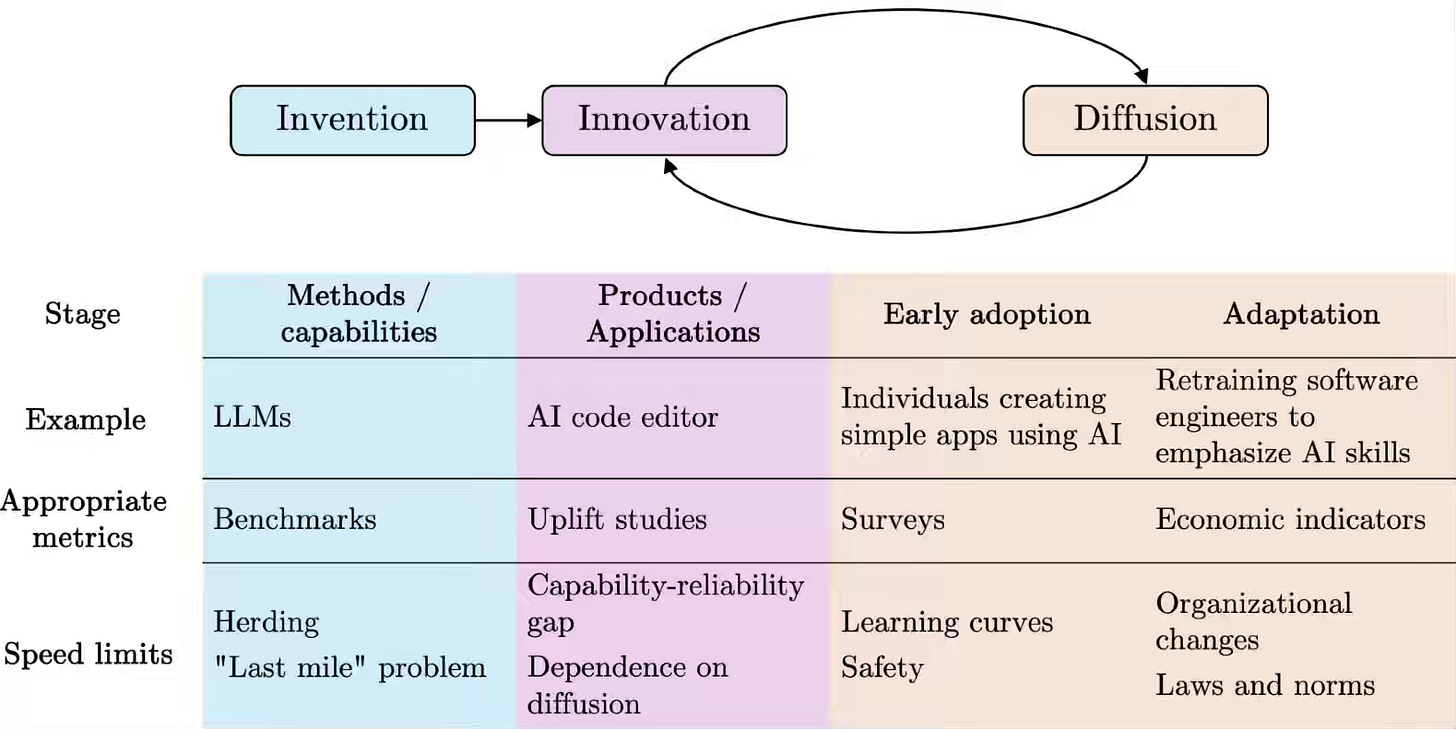

Arvind Narayanan & Sayash Kapoor: AI as Normal Technology

The authors write about why AI should be understood as a “normal technology” rather than a looming superintelligence. The essay details how AI’s impact will diffuse slowly, constrained by safety, institutions, and real-world limits, and argues that risks lie less in catastrophic misalignment than in systemic harms like bias, inequality, and erosion of trust. The authors call for resilience-focused policies—emphasizing monitoring, transparency, equitable diffusion, and defensive applications—while rejecting nonproliferation approaches that centralize control and hinder competition.

https://knightcolumbia.org/content/ai-as-normal-technology

Marc Brooker: LLMs as Parts of Systems

As a system builder, I’m much more interested in what systems of LLMs and tools can do together. LLMs and code interpreters. LLMs and databases. LLMs and browsers. LLMs and SMT solvers.

I believe this represents a fundamental evolution in system design, where incorporating non-deterministic components into systems thinking will pose the next significant architectural challenge.

https://brooker.co.za/blog/2025/08/12/llms-as-components.html

UserJot: Best Practices for Building Agentic AI Systems: What Actually Works in Production

Companies are increasingly integrating agentic AI systems into their business processes, from coding to support engineering. The article provides practical guidelines for building AI agents using a two-tier agent model and task decomposition patterns.

https://userjot.com/blog/best-practices-building-agentic-ai-systems

Sponsored: The Data Platform Fundamentals Guide

Learn the fundamental concepts to build a data platform in your organization.

- Tips and tricks for data modeling and data ingestion patterns

- Explore the benefits of an observation layer across your data pipelines

- Learn the key strategies for ensuring data quality for your organization

Addy Osmani: An Engineer's Guide to AI Code Model Evals

The author writes about how evaluations (“evals”) underpin the systematic improvement of coding-capable AI models like Gemini or GPT. The blog details how evals act like software tests, using goldens, autoraters, and benchmarks such as HumanEval or SWE-bench to define “what good looks like,” guide hill-climbing improvements, and prevent regressions, while cautioning against overfitting, leakage, and narrow metrics. The author emphasizes aligning evals with real-world engineering tasks, evolving test suites over time, and treating evals as both a measuring stick and a compass for building models that can not only code but also engineer.

https://addyosmani.com/blog/ai-evals/
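To make the "evals as software tests" idea concrete, here is a minimal sketch of a golden-based eval that scores a candidate and flags a regression against a baseline. Everything in it is hypothetical and for illustration only: the `GOLDENS` data, the `run_eval` helper, and the two toy `add` implementations stand in for model-generated code and are not taken from the article.

```python
from typing import Callable, Dict, List

# Hypothetical golden set: inputs paired with the output we consider correct.
GOLDENS: List[Dict] = [
    {"args": (2, 3), "expected": 5},
    {"args": (-1, 1), "expected": 0},
    {"args": (0, 0), "expected": 0},
]

def run_eval(candidate: Callable[[int, int], int], goldens: List[Dict]) -> float:
    """Return the fraction of golden cases the candidate gets right."""
    passed = 0
    for case in goldens:
        try:
            if candidate(*case["args"]) == case["expected"]:
                passed += 1
        except Exception:
            # A crash counts as a failed case, not a failed eval run.
            pass
    return passed / len(goldens)

def good_add(a: int, b: int) -> int:
    return a + b

def buggy_add(a: int, b: int) -> int:
    # Deliberate bug: wrong result when the first argument is negative.
    return a + b if a >= 0 else a - b

baseline_score = run_eval(good_add, GOLDENS)
candidate_score = run_eval(buggy_add, GOLDENS)

# Regression gate: a new candidate must not score below the baseline.
regressed = candidate_score < baseline_score
print(f"baseline={baseline_score:.2f} candidate={candidate_score:.2f} regressed={regressed}")
```

A real harness would replace exact-match comparison with executed test suites or autoraters, and would hold out part of the golden set to guard against the overfitting and leakage the article warns about.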

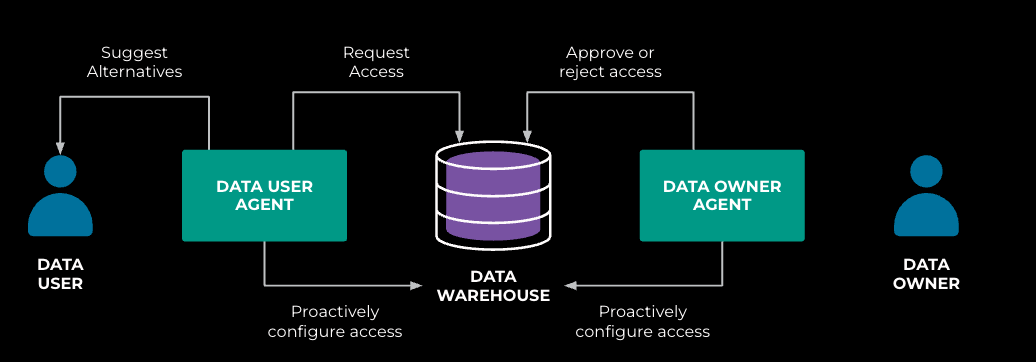

Meta: Creating AI agent solutions for warehouse data access and security

Meta writes about building multi-agent systems to streamline secure data access to the data warehouse. The blog details how user agents suggest alternatives, enable low-risk exploration, and craft access requests, while owner agents enforce SOP-based security and configure rules—all supported by LLMs for context and intention management. It emphasizes guardrails such as query-level controls, access budgets, and rule-based risk checks, plus daily evaluation and feedback loops, to balance productivity with strict security as agents become integral to data workflows.

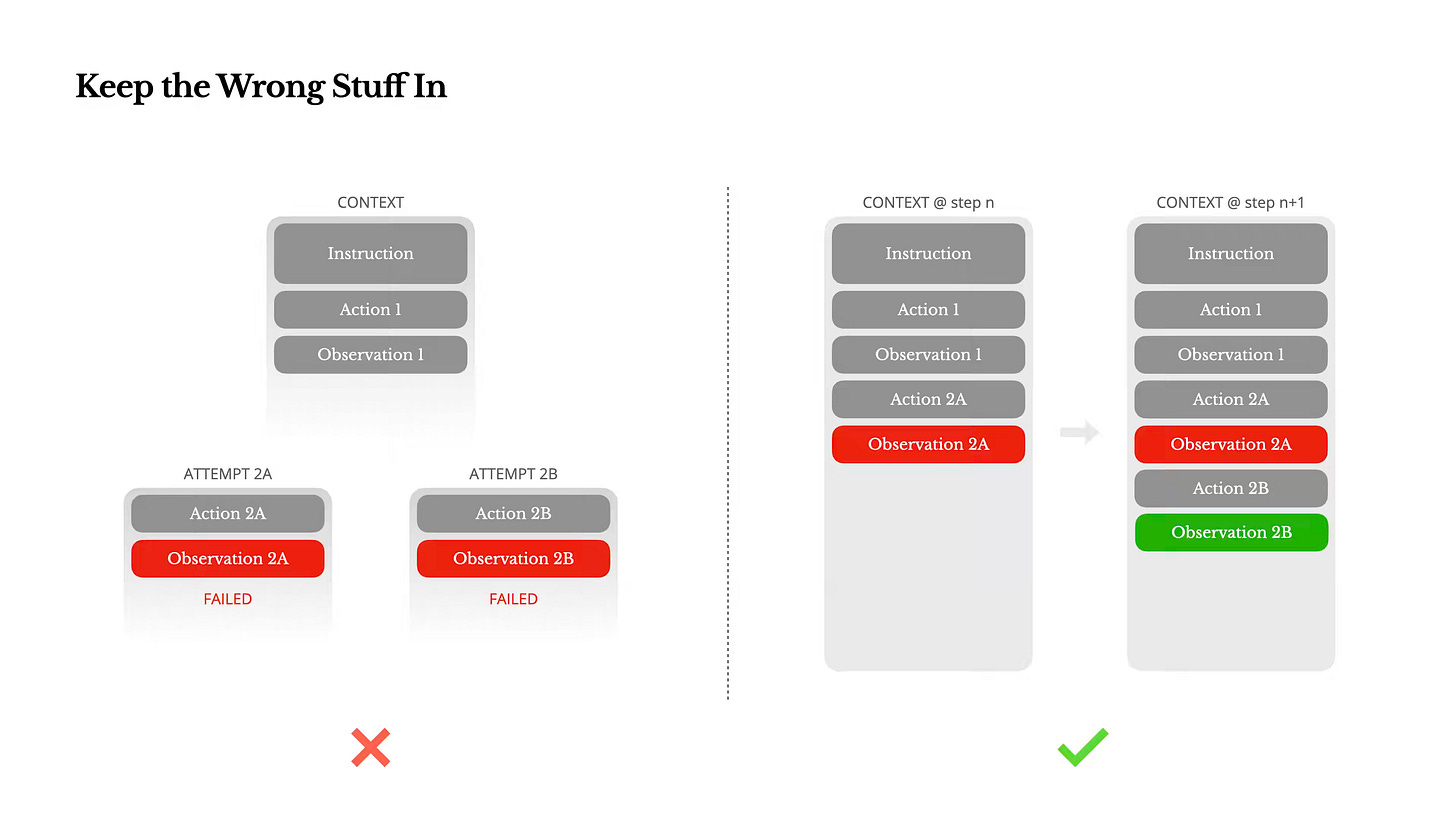

Manus: Context Engineering for AI Agents: Lessons from Building Manus

The author writes about lessons from building Manus and why context engineering, not model training, defines agent performance. The blog details strategies such as optimizing KV-cache hit rates, masking rather than removing tools, externalizing memory through the file system, reciting goals into context, preserving errors for recovery, and injecting diversity to avoid few-shot ruts. It emphasizes that shaping context—through memory, environment, and feedback—determines how fast agents run, how well they recover, and how far they scale.

https://manus.im/blog/Context-Engineering-for-AI-Agents-Lessons-from-Building-Manus

Drew Breunig: How to Fix Your Context

The previous article on Manus showed why context engineering matters. This post covers strategies to mitigate failures in long contexts, such as poisoning, distraction, confusion, and clash, by treating context as a problem of information management.

The blog details six tactics: retrieval-augmented generation (RAG) for selective input, tool loadouts to limit the action space, context quarantine through sub-agents, pruning to remove irrelevant data, summarization to condense what remains, and offloading to external scratchpads. The post emphasizes that every token in context influences model behavior, making disciplined information management essential even with massive modern context windows.

https://www.dbreunig.com/2025/06/26/how-to-fix-your-context.html
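As one illustration of these tactics, the sketch below shows a toy "tool loadout": only the tools most relevant to the request are placed in context. It is a simplification under stated assumptions. The tool names, descriptions, `STOPWORDS` set, and `select_tools` helper are all hypothetical, and plain keyword overlap stands in for the embedding-based retrieval a production system would more likely use.

```python
from typing import Dict, List

# Hypothetical tool catalog: name -> natural-language description.
TOOLS: Dict[str, str] = {
    "sql_query": "run a sql query against the warehouse",
    "send_email": "send an email to a user",
    "plot_chart": "plot a chart from query results",
    "web_search": "search the web for pages",
}

# Small stopword list so filler words do not count as relevance.
STOPWORDS = {"a", "an", "the", "and", "to", "for", "from"}

def tokenize(text: str) -> set:
    return {w for w in text.lower().split() if w not in STOPWORDS}

def select_tools(query: str, tools: Dict[str, str], k: int = 2) -> List[str]:
    """Return up to k tool names ranked by keyword overlap with the query."""
    query_words = tokenize(query)
    scored = [
        (len(query_words & tokenize(description)), name)
        for name, description in tools.items()
    ]
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for overlap, name in scored[:k] if overlap > 0]

loadout = select_tools("run a query and plot the results", TOOLS)
print(loadout)
```

Only the selected tool definitions would then be included in the prompt, which keeps the action space small and leaves unrelated tool descriptions out of the context window.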

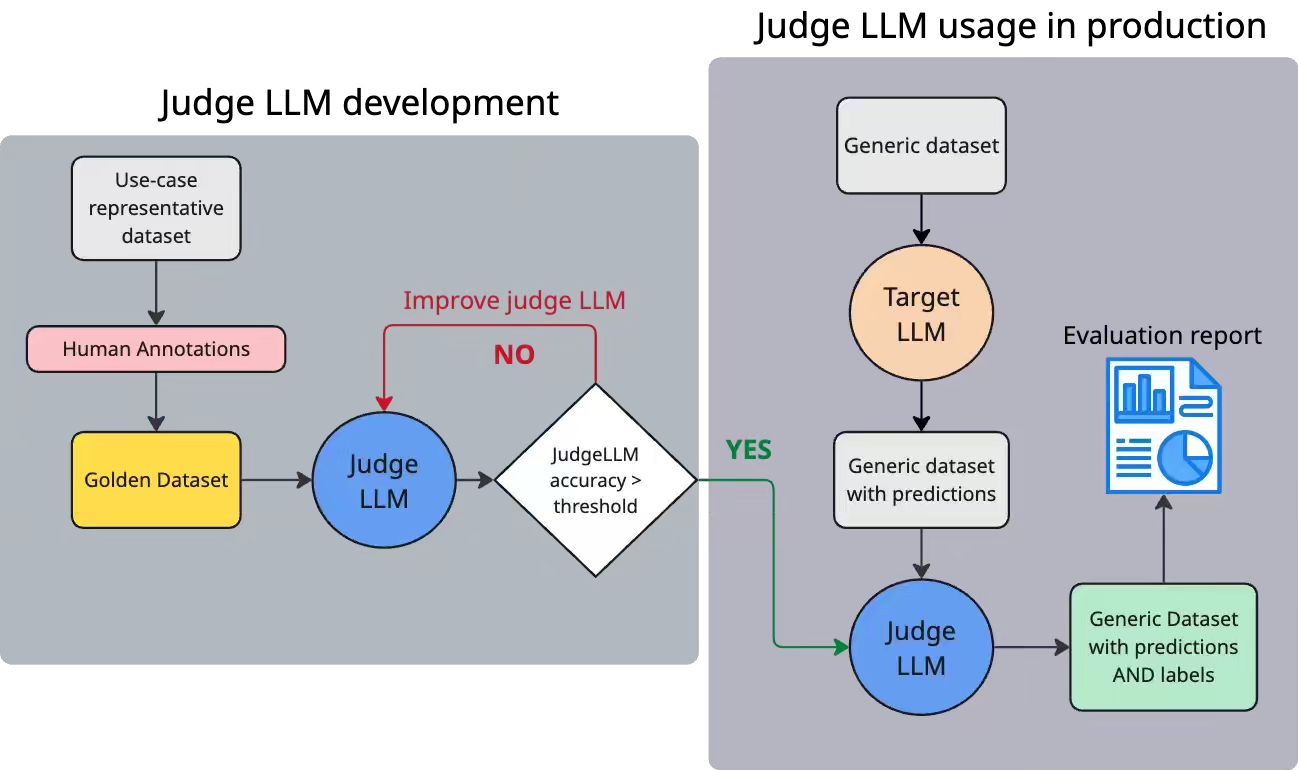

Booking.com: LLM Evaluation: Practical Tips at Booking.com

Booking.com writes about its experience developing judge-LLMs to evaluate generative AI applications at scale. The post details how high-quality “golden datasets” and rigorous annotation protocols underpin the judge-LLM framework, which uses strong models for prompt engineering and weaker models for cost-efficient monitoring, enabling metrics like clarity, accuracy, and instruction-following to be tracked continuously. The piece emphasizes that LLM-as-judge reduces reliance on human reviewers, highlights challenges in annotation and prompt design, and points to future directions such as automated prompt optimization, synthetic data generation, and evaluation methods for agentic systems.

https://booking.ai/llm-evaluation-practical-tips-at-booking-com-1b038a0d6662

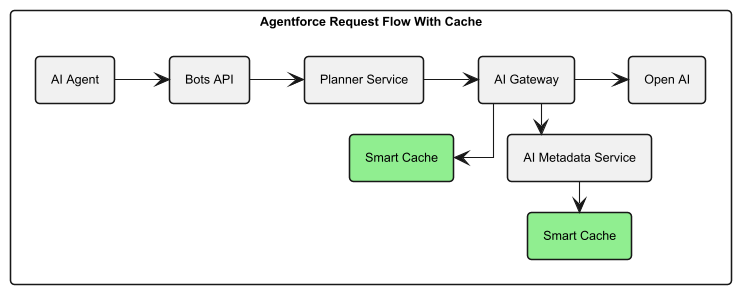

Salesforce: How Salesforce Delivers Reliable, Low-Latency AI Inference

Salesforce writes about how the AI Metadata Service (AIMS) team built a multi-layer caching system to deliver low-latency, resilient AI inference at scale. The post details how local L1 and service-level L2 caches reduced metadata fetch latency from 400ms to sub-millisecond, cut end-to-end request times by 27%, and insulated inference workflows from backend database outages.

https://engineering.salesforce.com/how-salesforce-delivers-reliable-low-latency-ai-inference/
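The layered lookup described above can be sketched roughly as follows. This is not Salesforce's implementation: the `TieredCache` class, the TTL value, and the dict standing in for a shared service-level cache are all assumptions made for illustration.

```python
import time
from typing import Callable, Dict, Tuple

class TieredCache:
    """Read-through cache: local L1 first, shared L2 second, backend last."""

    def __init__(self, backend: Callable[[str], str], l2: Dict[str, str],
                 l1_ttl: float = 60.0) -> None:
        self._backend = backend      # slow source of truth, e.g. a database
        self._l2 = l2                # stand-in for a shared service-level cache
        self._l1: Dict[str, Tuple[float, str]] = {}  # key -> (expiry, value)
        self._l1_ttl = l1_ttl

    def get(self, key: str) -> str:
        entry = self._l1.get(key)
        if entry is not None and entry[0] > time.monotonic():
            return entry[1]                      # L1 hit: no network hop
        if key in self._l2:
            value = self._l2[key]                # L2 hit: backend untouched
        else:
            value = self._backend(key)           # miss on both: may raise
            self._l2[key] = value
        self._l1[key] = (time.monotonic() + self._l1_ttl, value)
        return value

backend_calls = []

def healthy_backend(key: str) -> str:
    backend_calls.append(key)
    return f"metadata-for-{key}"

def failing_backend(key: str) -> str:
    raise RuntimeError("backend database is down")

shared_l2: Dict[str, str] = {}

# First instance warms both layers; the repeat read is served from L1.
instance_a = TieredCache(healthy_backend, shared_l2)
first = instance_a.get("model-a")
second = instance_a.get("model-a")

# A second instance with a dead backend still answers from the shared L2.
instance_b = TieredCache(failing_backend, shared_l2)
during_outage = instance_b.get("model-a")
print(first, len(backend_calls), during_outage)
```

The second instance illustrates the resilience claim: as long as a key is present in either cache layer, a backend outage does not reach the caller. Keys that were never cached would still fail, so a real system needs a policy for cold keys and for invalidating stale entries.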

Meta: Federation Platform and Privacy Waves: How Meta distributes compliance-related tasks at scale

Meta writes about Federation Platform and Privacy Waves, which distribute and manage compliance-related tasks at scale. The post details how the Federation Platform breaks down obligations into workstreams—detecting issues, assigning ownership, grouping tasks, and automating remediations—while Privacy Waves batches these tasks monthly to provide predictability, accountability, and improved developer experience.

All rights reserved, ProtoGrowth Inc., India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions.