Data Engineering Weekly #24

Weekly Data Engineering Newsletter

Welcome to the 24th edition of the data engineering newsletter. This week's release is a new set of articles covering Netflix's data warehouse storage optimization, Adobe's high-throughput ingestion with Iceberg, Uber's Kafka disaster recovery, complexities and considerations for real-time data pipelines, Allegro's marketing data infrastructure, the Apache Pinot and ClickHouse years in review, Confluera's Apache Pinot adoption, BuzzFeed's data infrastructure with BigQuery, Apache Beam's DataFrame API, and the rebranding of PrestoSQL as Trino.

Netflix: Optimizing data warehouse storage

Netflix's data warehouse contains hundreds of petabytes of data stored in AWS S3, and it is growing every day. Optimizing the S3 storage layout can yield faster query times, cheaper downstream processing, and increased developer productivity. Netflix writes about AutoOptimize, a service that automates storage optimization techniques like merge, sort, and compaction.

https://netflixtechblog.com/optimizing-data-warehouse-storage-7b94a48fdcbe
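
To make the merge/compaction idea concrete, here is a minimal sketch of how small files might be grouped into merge batches close to a target size. It is not Netflix's AutoOptimize algorithm; the function name `plan_compaction`, the 128 MB target, and the first-fit-decreasing heuristic are all assumptions chosen for illustration.

```python
from typing import List


def plan_compaction(file_sizes_mb: List[int], target_mb: int = 128) -> List[List[int]]:
    """Group files smaller than target_mb into merge batches of at most target_mb.

    Hypothetical helper: uses a first-fit-decreasing heuristic, which is an
    assumption for this sketch and not taken from the Netflix article.
    """
    # Files already at or above the target are left alone.
    small = sorted((s for s in file_sizes_mb if s < target_mb), reverse=True)
    groups: List[List[int]] = []
    for size in small:
        for group in groups:
            # Put the file into the first batch that still has room.
            if sum(group) + size <= target_mb:
                group.append(size)
                break
        else:
            # No existing batch fits, so start a new one.
            groups.append([size])
    return groups


sizes = [5, 120, 60, 40, 300, 20, 8]
plan = plan_compaction(sizes)
print(plan)  # [[120, 8], [60, 40, 20, 5]]
```

In this toy input, six small files collapse into two merged files, while the 300 MB file is untouched. Fewer, larger files generally mean fewer S3 requests per query, which is where the faster query times come from.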

Adobe: High Throughput Ingestion with Iceberg

Adobe's Experience Platform team writes the second part of its series on adopting Apache Iceberg. The Experience Platform API supports direct ingestion of batch files from clients. Though that improves the clients' efficiency, it brings the classic problems of small files and highly concurrent writes. The blog is an exciting read about the buffered write approach used to mitigate both problems and the improvements made around Apache Iceberg's version-hint.txt file.

https://medium.com/adobetech/high-throughput-ingestion-with-iceberg-ccf7877a413f

Iceberg at Adobe: https://medium.com/adobetech/iceberg-at-adobe-88cf1950e866
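
The buffered write idea can be sketched roughly as follows: instead of committing every incoming batch file to the table individually, hold files in a buffer and commit them together. This is not Adobe's implementation; the `BufferedWriter` class and the count-based flush threshold are assumptions for illustration (a real system would likely also flush on elapsed time or accumulated bytes).

```python
from typing import List


class BufferedWriter:
    """Hypothetical buffer that turns many small writes into fewer table commits."""

    def __init__(self, flush_threshold: int) -> None:
        self.flush_threshold = flush_threshold
        self.buffer: List[str] = []
        # Each entry stands in for one table commit containing several files.
        self.commits: List[List[str]] = []

    def ingest(self, batch_file: str) -> None:
        """Accept one incoming batch file and flush once the buffer is full."""
        self.buffer.append(batch_file)
        if len(self.buffer) >= self.flush_threshold:
            self.flush()

    def flush(self) -> None:
        """Commit all buffered files at once; do nothing if the buffer is empty."""
        if self.buffer:
            self.commits.append(self.buffer)
            self.buffer = []


writer = BufferedWriter(flush_threshold=3)
for i in range(7):
    writer.ingest(f"batch-{i}.parquet")
writer.flush()  # commit whatever is left over

print(len(writer.commits))  # 3
```

Seven incoming files result in three commits instead of seven, so fewer writers contend for the table's metadata at any one time.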

Uber: Disaster Recovery for Multi-Region Kafka at Uber

Uber has one of the largest deployments of Apache Kafka in the world, processing trillions of messages and multiple petabytes of data per day. In this blog, Uber writes about the architecture behind disaster recovery for multi-region Kafka. Uber adopted an active-active cluster pattern in which producers publish messages locally to regional clusters. The messages from the regional clusters are then replicated to aggregate clusters to provide a global view. The discussion of active-active and active-passive consumption patterns on the consumer side is an exciting read.
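
As a rough mental model of that topology, the sketch below simulates two regions in memory: producers write only to their local regional cluster, and a replication step copies every regional message into each region's aggregate cluster. The region names, message values, and the `produce`/`replicate` helpers are hypothetical, and no real Kafka client or replication tool is involved.

```python
from collections import defaultdict
from typing import Dict, List

REGIONS = ["region-a", "region-b"]  # hypothetical region names

# In-memory stand-ins for the regional and aggregate clusters of each region.
regional: Dict[str, List[str]] = defaultdict(list)
aggregate: Dict[str, List[str]] = defaultdict(list)


def produce(region: str, message: str) -> None:
    """Producers publish only to the regional cluster in their own region."""
    regional[region].append(message)


def replicate() -> None:
    """Copy messages from every regional cluster into every aggregate cluster."""
    for target in REGIONS:
        aggregate[target] = [m for source in REGIONS for m in regional[source]]


produce("region-a", "trip-1")
produce("region-b", "trip-2")
produce("region-a", "trip-3")
replicate()

# Each aggregate cluster holds the global set of messages. In a real deployment
# the interleaving (and therefore the offsets) can differ between regions.
print(set(aggregate["region-a"]) == set(aggregate["region-b"]))  # True
```

Because every aggregate cluster ends up with the global view, a consumer can in principle fail over to another region's aggregate cluster when its own region goes down.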

Hacking Analytics: Real-time Data Pipelines — Complexities & Considerations

Real-time infrastructure reduces the latency to analytical insight and makes business processes more agile. At the same time, it brings challenges across data ingestion, data processing, storage, and the serving of real-time insights. The article is an excellent read that summarizes the overall landscape of real-time infrastructure components.

Allegro: Big data marketing. The story of how the technology behind Allegro marketing works.

Allegro writes about the data infrastructure behind its marketing feeds, which integrate with Google Merchant Center and Facebook ads. The journey from one Spark job per feed to a second-generation feed generation framework and a self-healing system is an exciting read.

https://allegro.tech/2020/12/bigdata-marketing.html

Apache Pinot: year-in-review 2020 and the roadmap for 2021

2020 was an exciting year for the Apache Pinot community, with some excellent query optimizations, support for JSON querying, and upsert support.

The Apache Pinot community published its 2020 year-in-review and the roadmap for 2021.

Confluera: Real-time Security Insights: Apache Pinot at Confluera

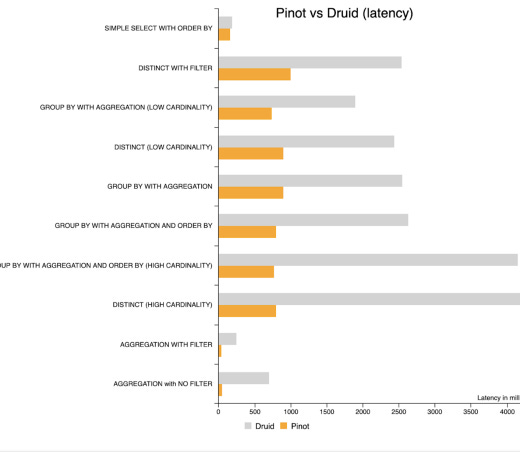

Staying with Apache Pinot, Confluera, a threat detection and response platform, writes about its adoption of Apache Pinot. The blog includes a benchmark comparing Apache Druid and Apache Pinot that shows Pinot's performance gain on aggregation queries.

BuzzFeed: More Data, More Problems

BuzzFeed writes about its migration to Google BigQuery and how it scales its data operations. One of the challenges is that BigQuery calculates the number of slots required by each query based on its complexity and the amount of data scanned. Inefficient or large queries take longer to execute and can block or slow other concurrent queries because of the number of slots they require. BuzzFeed writes about how adopting data modeling techniques and materialization helped optimize the queries.

https://tech.buzzfeed.com/more-data-more-problems-3c585b8bc84d

Apache Beam: DataFrame API Preview now Available!

Apache Beam introduced Beam SQL in early 2019. Continuing that journey toward simplifying the API with more familiar patterns, Apache Beam has introduced a DataFrame API that aims to be compatible with the well-known Pandas DataFrame API.

https://beam.apache.org/blog/dataframe-api-preview-available/

ClickHouse: The ClickHouse Community in 2020

ClickHouse published its 2020 year-in-review, covering the origin story at Yandex, a community overview, how to contribute, and the adoption story from Cloudflare.

https://clickhouse.tech/blog/en/2020/the-clickhouse-community/

Trino: We’re rebranding PrestoSQL as Trino

PrestoSQL rebrands as Trino after Facebook's trademark claim on the Presto name. Trino published the commit trends comparing Trino and PrestoDB. It's sad to see one of the vital open-source projects in modern data infrastructure split in mindshare, and I hope Trino and PrestoDB complement each other.

https://trino.io/blog/2020/12/27/announcing-trino.html

Links are provided for informational purposes and do not imply endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers' opinions.