Data Engineering Weekly #255

The Weekly Data Engineering Newsletter

Dagster Running Dagster dives into AI analytics.

In this upcoming session, Analytics Lead Anil walks through how Compass has increased the Dagster data team's capacity, shares best practices for data modeling that work well with AI assistants (hint: nested columns and wide tables are your friends), and demos a real case where our Compass Dagster+ integration identified why a Postgres-to-Snowflake pipeline was failing 40-50% of the time.

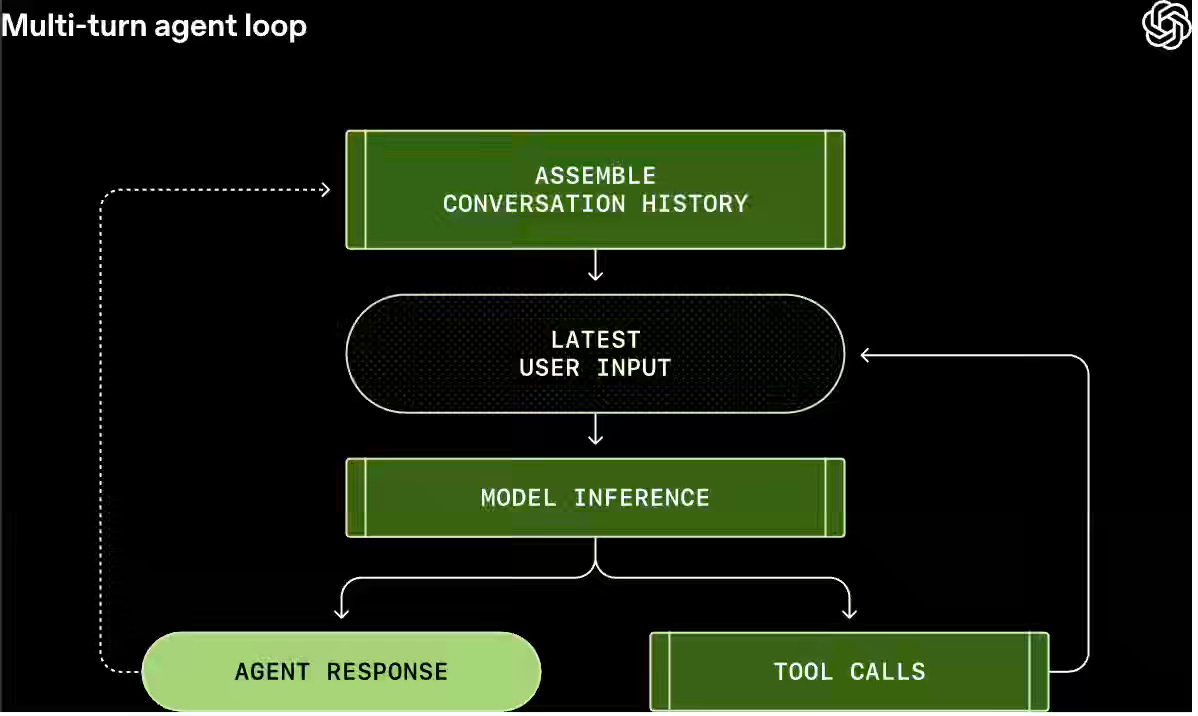

OpenAI: Unrolling the Codex agent loop

Explanations of AI agents often obscure how local tools, model inference, and user interaction are orchestrated in practice. The article breaks down the Codex CLI agent loop, detailing how prompts, tool calls, iterative inference, context compaction, and prompt caching work together to execute software tasks efficiently. By combining stateless operation, automatic context management, and flexible tool integration via MCP, Codex achieves secure, performant local agent execution without retaining sessions on the server.

https://openai.com/index/unrolling-the-codex-agent-loop/
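The loop the article describes can be sketched in a few lines. This is a minimal illustration, not Codex's code: `run_agent`, `compact`, `ToolCall`, and `ModelReply` are hypothetical names, the model is any callable that takes the message history, and compaction is reduced to a placeholder summary. It does show the stateless shape: the full history is resent on every turn, and the loop ends when the model stops requesting tools.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ToolCall:
    name: str
    args: dict

@dataclass
class ModelReply:
    text: str
    tool_calls: list = field(default_factory=list)

def compact(history: list, keep_last: int = 4) -> list:
    """Placeholder compaction: keep the task and the newest messages, summarize the rest."""
    dropped = len(history) - 1 - keep_last
    summary = {"role": "user", "content": f"[summary of {dropped} earlier messages]"}
    return [history[0], summary] + history[-keep_last:]

def run_agent(model: Callable, tools: dict, task: str,
              max_turns: int = 10, max_messages: int = 20) -> str:
    history = [{"role": "user", "content": task}]
    for _ in range(max_turns):
        # Stateless: the whole history is sent on every model call.
        reply = model(history)
        history.append({"role": "assistant", "content": reply.text})
        if not reply.tool_calls:
            return reply.text  # no tool requests: the model considers the task done
        for call in reply.tool_calls:
            output = tools[call.name](**call.args)
            history.append({"role": "tool", "name": call.name, "content": output})
        if len(history) > max_messages:
            history = compact(history)
    raise RuntimeError("agent did not finish within max_turns")

# Usage with a scripted stand-in for the model.
def fake_model(history):
    if history[-1]["role"] == "user":
        return ModelReply("listing files", [ToolCall("ls", {"path": "."})])
    return ModelReply(f"done: {history[-1]['content']}")

print(run_agent(fake_model, {"ls": lambda path: "README.md"}, "what files are here?"))
```

In a real agent, a compaction step that keeps the early prefix stable is what lets prompt caching pay off across turns.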

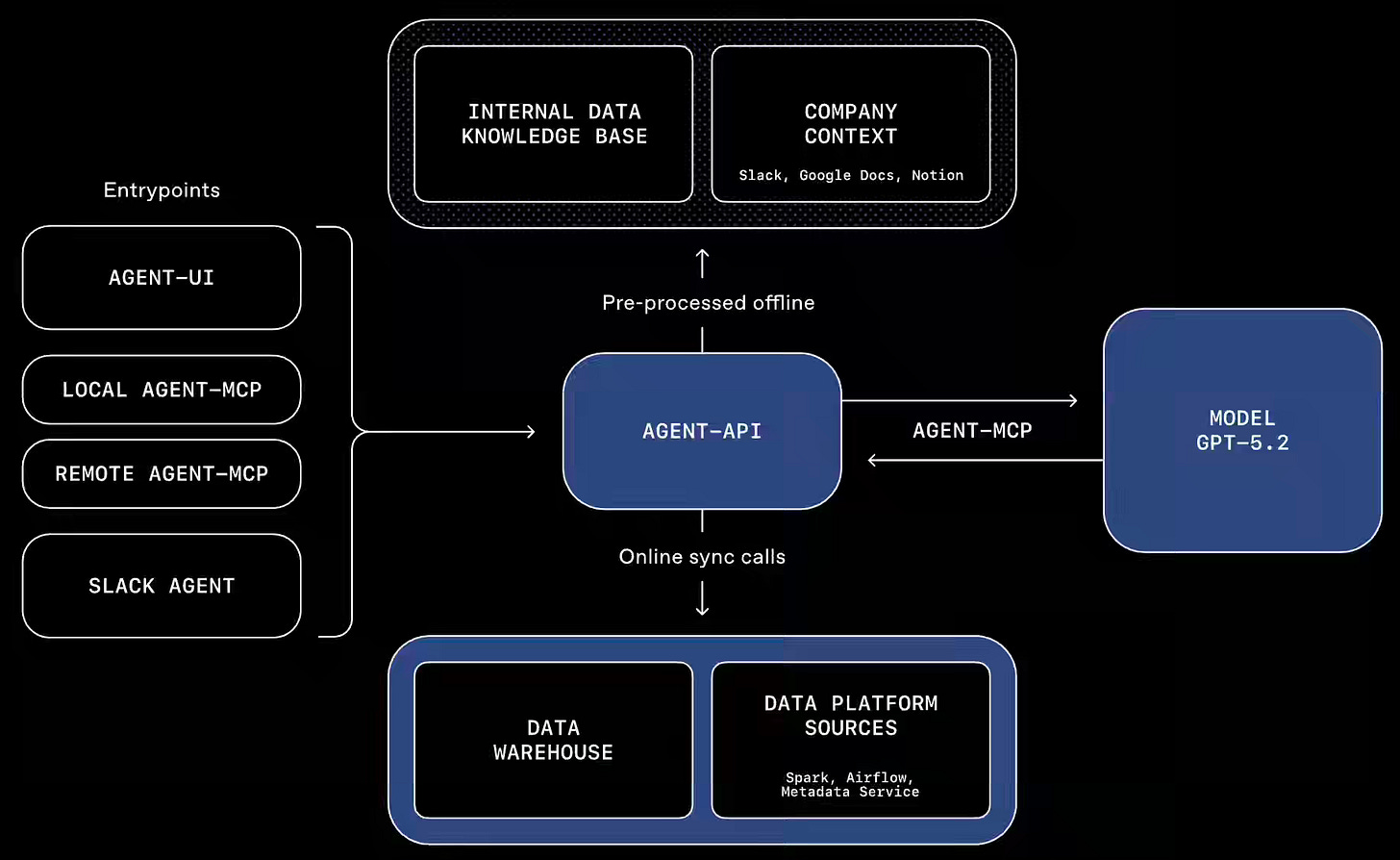

OpenAI: Inside OpenAI’s in-house data agent

OpenAI writes about its internal data agent, which uses a closed-loop, self-correcting process and multiple context layers to translate natural language into reliable queries across hundreds of petabytes of data. By grounding meaning in code, minimizing tool complexity, and enforcing pass-through permissions with continuous evaluation, the system delivers fast, secure, and reliable data access for employees at scale.

https://openai.com/index/inside-our-in-house-data-agent/
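The closed-loop, self-correcting idea can be illustrated with a generic retry pattern. This is not OpenAI's implementation: `answer` and `stub_generator` are hypothetical, SQLite stands in for the warehouse, and the generator is a stub where a real agent would call a model with schema and business context. A proposed query runs, and any database error text is fed back for the next attempt.

```python
import sqlite3
from typing import Callable, Optional

# generate(question, previous_error) -> SQL; stands in for an LLM call (hypothetical).
Generator = Callable[[str, Optional[str]], str]

def answer(conn: sqlite3.Connection, question: str, generate: Generator,
           max_attempts: int = 3) -> list:
    """Generate SQL, run it, and feed any database error into the next attempt."""
    error: Optional[str] = None
    for _ in range(max_attempts):
        sql = generate(question, error)
        try:
            return conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            error = str(exc)  # e.g. "no such column: order_total"
    raise RuntimeError(f"no valid query after {max_attempts} attempts: {error}")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 32.5)])

def stub_generator(question: str, error: Optional[str]) -> str:
    # First guess uses a wrong column; the retry fixes it after seeing the error.
    if error is None:
        return "SELECT SUM(order_total) FROM orders"
    return "SELECT SUM(amount) FROM orders"

print(answer(conn, "What is total revenue?", stub_generator))
```

"The query ran" is only the weakest check; the evaluation layer the article mentions would also compare results against known-good answers.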

Preset: The Semantic Layer Is Back. Here’s What We’re Doing About It.

The article reads like a pitch for Preset, but what I liked most is its clear analogy for what a semantic layer is and why it has failed, even though legacy tools like Business Objects support it. Overall, I'm excited about agents as an interface for insights and about the renewed interest in the semantic layer.

https://preset.io/blog/semantic-layer-is-back/

Sponsored: How to build a data platform that's ready for AI

Traditional data platforms are becoming the biggest bottleneck when companies experiment with AI. Learn how to build a unified control plane that enables AI-driven development, reduces pipeline failures, and cuts complexity.

- Transform from Big Complexity to AI-ready architecture

- Real metrics from organizations achieving 50% cost reductions

- Introduction to Components: YAML-first pipelines that AI can build

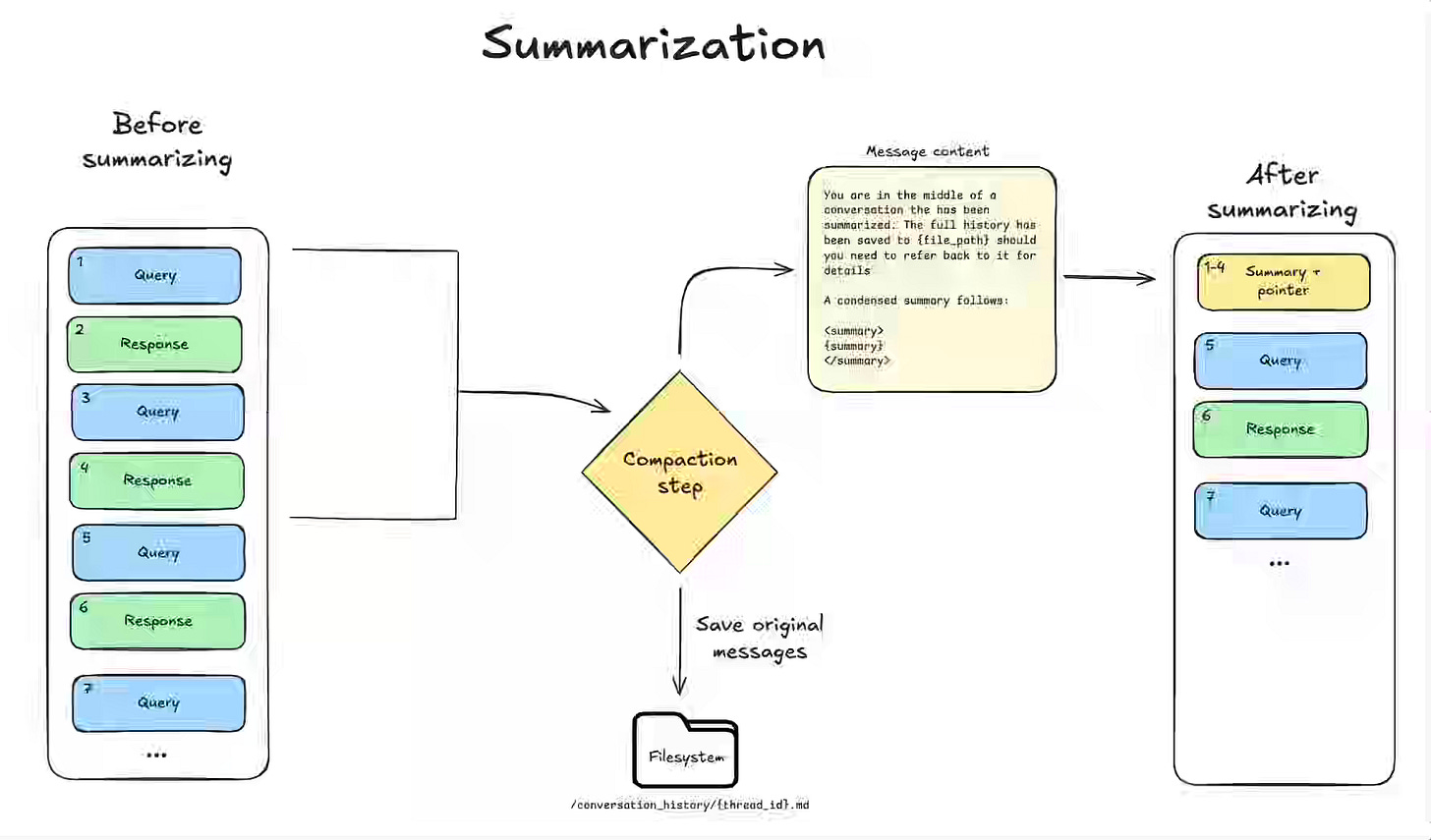

LangChain: Context Management for Deep Agents

AI agents on long-running tasks risk context rot, where reasoning quality degrades as accumulated context approaches or exceeds the LLM's context window. The article explains how the Deep Agents SDK actively manages context by offloading large tool inputs and outputs, leaving filesystem-backed pointers in their place, and applying structured summarization to stay within token limits. Targeted evaluations check that agents can recover critical details from compressed context and maintain task intent over extended workflows.

https://www.blog.langchain.com/context-management-for-deepagents/
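Offloading with filesystem-backed pointers can be sketched as follows. The function names, the 2,000-character threshold, and the file naming are assumptions for illustration, not the Deep Agents SDK's API; summarization is not shown. Large tool outputs go to disk, the model's context receives only a pointer plus a short preview, and a separate read tool lets the agent page back through the full result when it needs a detail.

```python
import tempfile
from pathlib import Path

def offload_if_large(output: str, workdir: Path, call_id: str,
                     max_chars: int = 2000, preview_chars: int = 200) -> str:
    """Keep small tool outputs inline; write large ones to disk and return a pointer."""
    if len(output) <= max_chars:
        return output
    path = workdir / f"tool_output_{call_id}.txt"
    path.write_text(output)
    # Only this short pointer plus a preview goes into the model's context.
    return (f"[{len(output)} chars saved to {path}; "
            f"first {preview_chars} shown]\n{output[:preview_chars]}")

def read_offloaded(path: str, start: int = 0, length: int = 2000) -> str:
    """A tool the agent can call to page back through an offloaded result."""
    return Path(path).read_text()[start:start + length]

workdir = Path(tempfile.mkdtemp())
pointer = offload_if_large("row\n" * 5000, workdir, "call_1")
print(pointer.splitlines()[0])
print(read_offloaded(str(workdir / "tool_output_call_1.txt"), start=0, length=8))
```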

Dropbox: Engineering VP Josh Clemm on how we use knowledge graphs, MCP, and DSPy in Dash

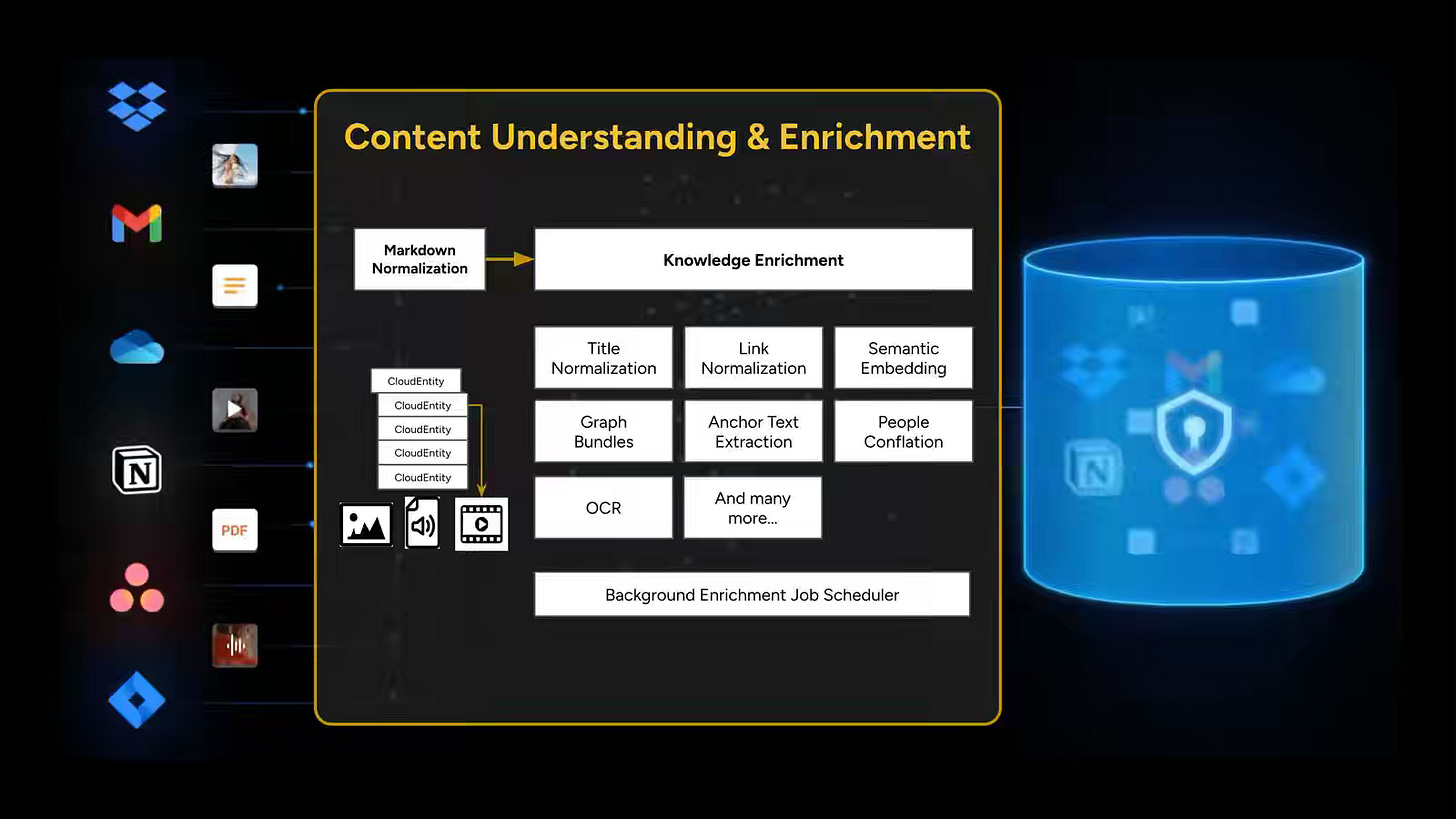

Building a universal search and agentic workspace is difficult because work data spans many tools, formats, and contexts, while LLMs face latency and context limits. The article explains how Dropbox Dash uses an index-based retrieval system, a context engine with multimodal processing, knowledge bundles, and MCP-based super tools, combined with LLM-as-a-judge evaluation and DSPy-driven prompt optimization.

https://dropbox.tech/machine-learning/vp-josh-clemm-knowledge-graphs-mcp-and-dspy-dash

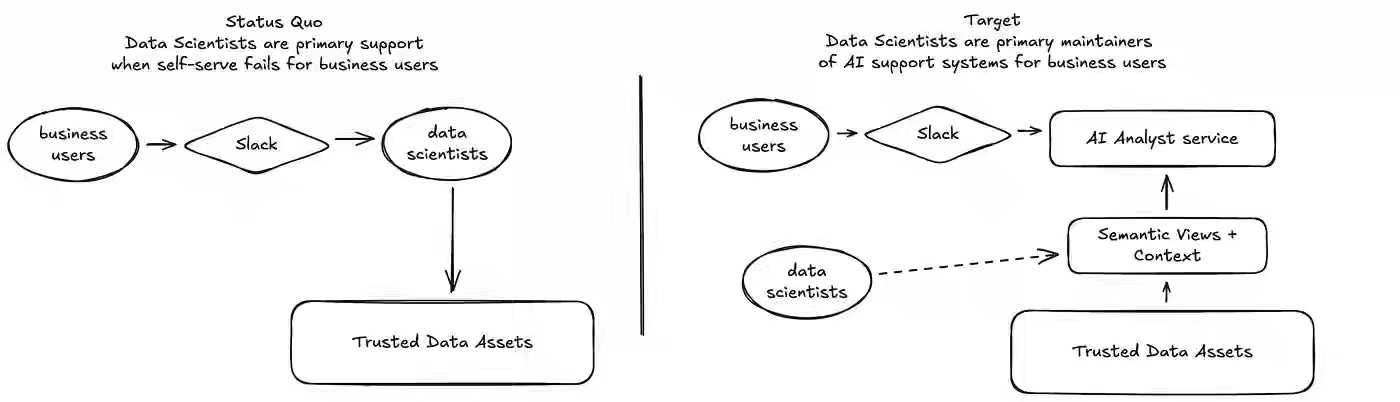

Whatnot: Lessons learned from scaling data scientists with AI

AI-driven analytics tools struggle to generate correct queries because raw tables lack explicit business meaning and consistent relationships. The article explains how semantic views encode business logic, table relationships, and approved data scope to give LLMs precise, machine-readable context for SQL generation. By standardizing definitions and constraining access to vetted datasets, semantic views improve query accuracy, reduce hallucinations, and make AI-assisted analytics safer and more reliable.
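To make the idea concrete, here is a plain-Python sketch of what a semantic view encodes: business names for metrics and dimensions, the joins between tables, and an approved scope that every request is checked against. It is not Whatnot's implementation or any warehouse's semantic view syntax; the class, the tables, and the `gmv` metric are hypothetical. A real system would mainly hand these definitions to the LLM as context, and this naive compiler always applies every join.

```python
from dataclasses import dataclass

@dataclass
class SemanticView:
    """Hypothetical semantic view: approved tables, joins, dimensions, and metrics."""
    base_table: str
    joins: dict        # joined table -> join condition
    dimensions: dict   # business name -> SQL expression
    metrics: dict      # business name -> SQL aggregate expression

    def compile(self, metrics: list, dimensions: list) -> str:
        """Turn a request in business terms into SQL, rejecting anything out of scope."""
        unknown = [m for m in metrics if m not in self.metrics]
        unknown += [d for d in dimensions if d not in self.dimensions]
        if unknown:
            raise ValueError(f"not in the approved semantic view: {unknown}")
        select = [f"{self.dimensions[d]} AS {d}" for d in dimensions]
        select += [f"{self.metrics[m]} AS {m}" for m in metrics]
        sql = f"SELECT {', '.join(select)} FROM {self.base_table}"
        for table, condition in self.joins.items():
            sql += f" JOIN {table} ON {condition}"
        if dimensions:
            sql += " GROUP BY " + ", ".join(self.dimensions[d] for d in dimensions)
        return sql

orders = SemanticView(
    base_table="orders o",
    joins={"sellers s": "o.seller_id = s.id"},
    dimensions={"seller_category": "s.category"},
    metrics={"gmv": "SUM(o.price * o.quantity)"},
)
print(orders.compile(metrics=["gmv"], dimensions=["seller_category"]))
```

Because the LLM only chooses names from the view, a misspelled or unapproved metric fails loudly instead of producing plausible-looking but wrong SQL.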

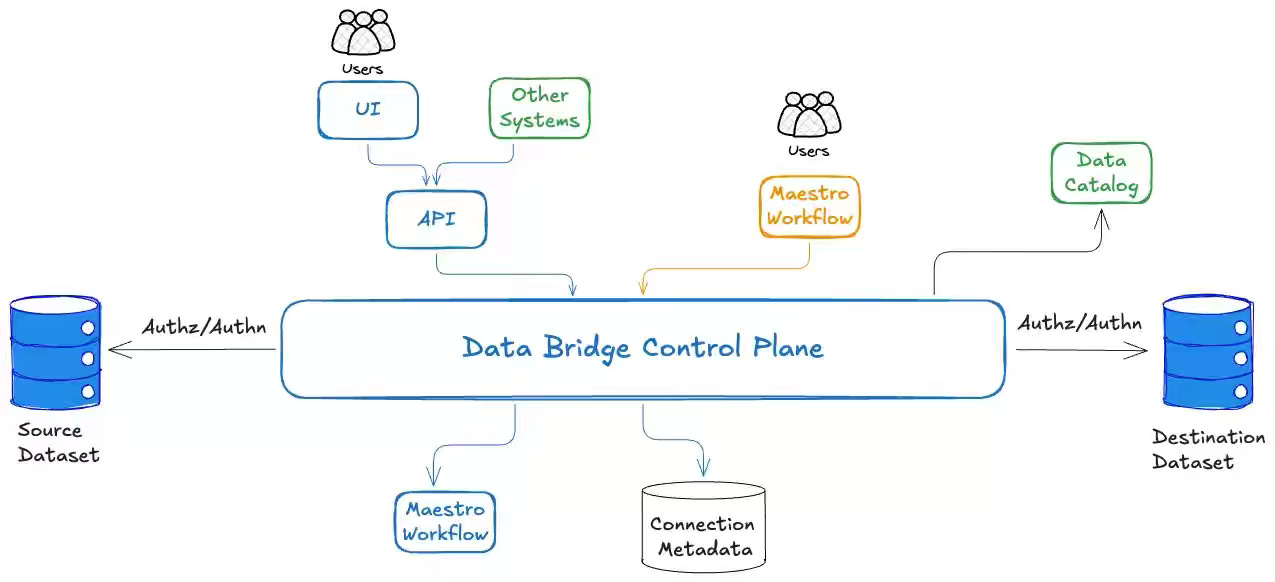

Netflix: Data Bridge: How Netflix simplifies data movement

Fragmented data movement tooling creates operational overhead, inconsistent governance, and tightly coupled implementations across large data ecosystems. The article describes how Netflix built Data Bridge as a unified control plane that separates user intent from execution, centralizes governance, and orchestrates existing data movement systems through standardized interfaces.

https://netflixtechblog.medium.com/data-bridge-how-netflix-simplifies-data-movement-36d10d91c313
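Separating intent from execution can be sketched as a small registry behind a single submit call. Everything here is hypothetical (`MoveIntent`, `register`, `submit`, the URI schemes, and the owner check); these are not Netflix's interfaces, and a real control plane would also track state, retries, and lineage. The point is that users declare what to move, governance is applied in one place, and routing to an existing movement system happens behind the interface.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class MoveIntent:
    """What to move and who owns it; says nothing about how the copy runs."""
    source: str        # e.g. "mysql://orders_db/orders"
    destination: str   # e.g. "iceberg://warehouse/orders"
    owner: str

# (source scheme, destination scheme) -> executor that knows how to move the data.
EXECUTORS: dict = {}

def register(src: str, dst: str) -> Callable:
    def wrap(fn: Callable) -> Callable:
        EXECUTORS[(src, dst)] = fn
        return fn
    return wrap

def scheme(uri: str) -> str:
    return uri.split("://", 1)[0]

def submit(intent: MoveIntent, allowed_owners: set) -> str:
    """Control plane: apply governance once, then route to an existing system."""
    if intent.owner not in allowed_owners:
        raise PermissionError(f"{intent.owner} may not move data")
    key = (scheme(intent.source), scheme(intent.destination))
    if key not in EXECUTORS:
        raise LookupError(f"no executor registered for {key}")
    return EXECUTORS[key](intent)

@register("mysql", "iceberg")
def batch_copy(intent: MoveIntent) -> str:
    return f"batch copy job: {intent.source} -> {intent.destination}"

print(submit(MoveIntent("mysql://orders_db/orders", "iceberg://warehouse/orders", "team-a"),
             allowed_owners={"team-a"}))
```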

LinkedIn: Contextual agent playbooks and tools: How LinkedIn gave AI coding agents organizational context

AI coding agents struggle to operate effectively without access to company-specific context, tools, and workflows. The article describes LinkedIn’s CAPT framework, which uses MCP, executable playbooks, and scalable meta-tools to connect agents to internal systems while controlling context and tool discovery. By packaging CAPT as a zero-friction local service, LinkedIn enables agents to automate debugging, incident response, data analysis, and issue triage, reducing investigation time by up to 70%.
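One common reading of "meta-tools" is a pair of generic tools, search and call, that stand in for a large tool catalog, so the agent's context holds two tool schemas instead of hundreds. The sketch below assumes that reading; it is not CAPT's API, and the tool names, descriptions, and keyword scoring are hypothetical (a production system could rank with embeddings instead).

```python
from typing import Callable

class ToolRegistry:
    """Hypothetical registry exposing two meta-tools instead of every internal tool."""

    def __init__(self) -> None:
        self._tools: dict = {}  # name -> (description, function)

    def add(self, name: str, description: str, fn: Callable[..., str]) -> None:
        self._tools[name] = (description, fn)

    def search_tools(self, query: str, limit: int = 3) -> list:
        """Meta-tool 1: rank tools by how many query words appear in their descriptions."""
        words = query.lower().split()
        scored = []
        for name, (desc, _) in self._tools.items():
            desc_words = set(desc.lower().split())
            scored.append((sum(w in desc_words for w in words), name))
        ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
        return [name for score, name in ranked if score > 0][:limit]

    def call_tool(self, name: str, **kwargs) -> str:
        """Meta-tool 2: invoke a tool the agent found through search_tools."""
        return self._tools[name][1](**kwargs)

registry = ToolRegistry()
registry.add("get_incident", "fetch an incident ticket by id",
             lambda ticket_id: f"incident {ticket_id}: open")
registry.add("query_metrics", "run a metrics query for a service", lambda q: "p99=120ms")
registry.add("search_code", "search the monorepo code", lambda q: "3 matches")

print(registry.search_tools("open incident ticket"))
print(registry.call_tool("get_incident", ticket_id="INC-42"))
```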

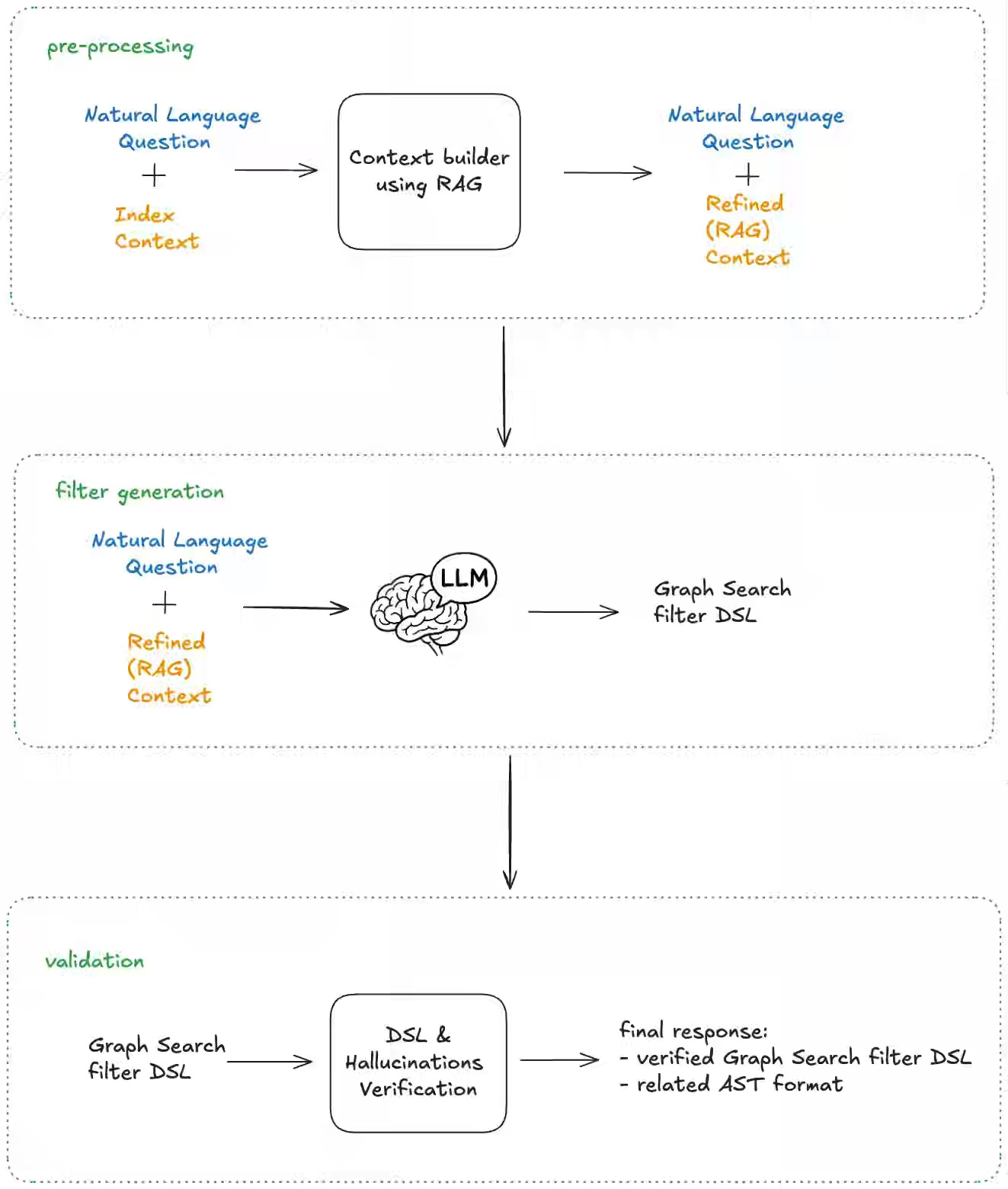

Netflix: The AI Evolution of Graph Search at Netflix: From Structured Queries to Natural Language

Enterprise search systems struggle when users must express complex filters through rigid, technical query languages. The article explains how Netflix evolved Graph Search by using LLMs to translate natural language into validated, schema-aware DSL queries with field-level RAG and AST-based verification. By visualizing AI-generated logic and supporting explicit entity selection, the platform lets users query federated data intuitively while maintaining correctness and trust.

https://netflixtechblog.com/the-ai-evolution-of-graph-search-at-netflix-d416ec5b1151
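The verification step can be illustrated with a tiny filter AST checked against a schema before any query runs. This assumes the LLM emits the filter as nested dicts; Netflix's actual DSL, field names, and validation rules are not reproduced here, so `SCHEMA`, `validate`, and the fields are hypothetical.

```python
# Hypothetical schema: filterable field -> expected value type.
SCHEMA = {"title.country": str, "title.release_year": int, "title.is_original": bool}
OPERATORS = {"=", "!=", ">", "<"}

def validate(node: dict, schema: dict = SCHEMA) -> list:
    """Walk a filter AST and collect problems; an empty list means the filter is valid."""
    for combinator in ("and", "or"):
        if combinator in node:
            errors = []
            for child in node[combinator]:
                errors += validate(child, schema)
            return errors
    errors = []
    field, op, value = node.get("field"), node.get("op"), node.get("value")
    if field not in schema:
        errors.append(f"unknown field {field!r}")
    elif type(value) is not schema[field]:  # exact check, so True is not accepted as an int
        errors.append(f"{field} expects {schema[field].__name__}, got {type(value).__name__}")
    if op not in OPERATORS:
        errors.append(f"unsupported operator {op!r}")
    return errors

# A filter an LLM might emit for "originals from the US released after 2020".
generated = {"and": [
    {"field": "title.country", "op": "=", "value": "US"},
    {"field": "title.is_original", "op": "=", "value": True},
    {"field": "title.release_yr", "op": ">", "value": 2020},
]}
print(validate(generated))
```

Returning every error, rather than raising on the first, makes it easy to send all problems back to the model for one corrected attempt.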

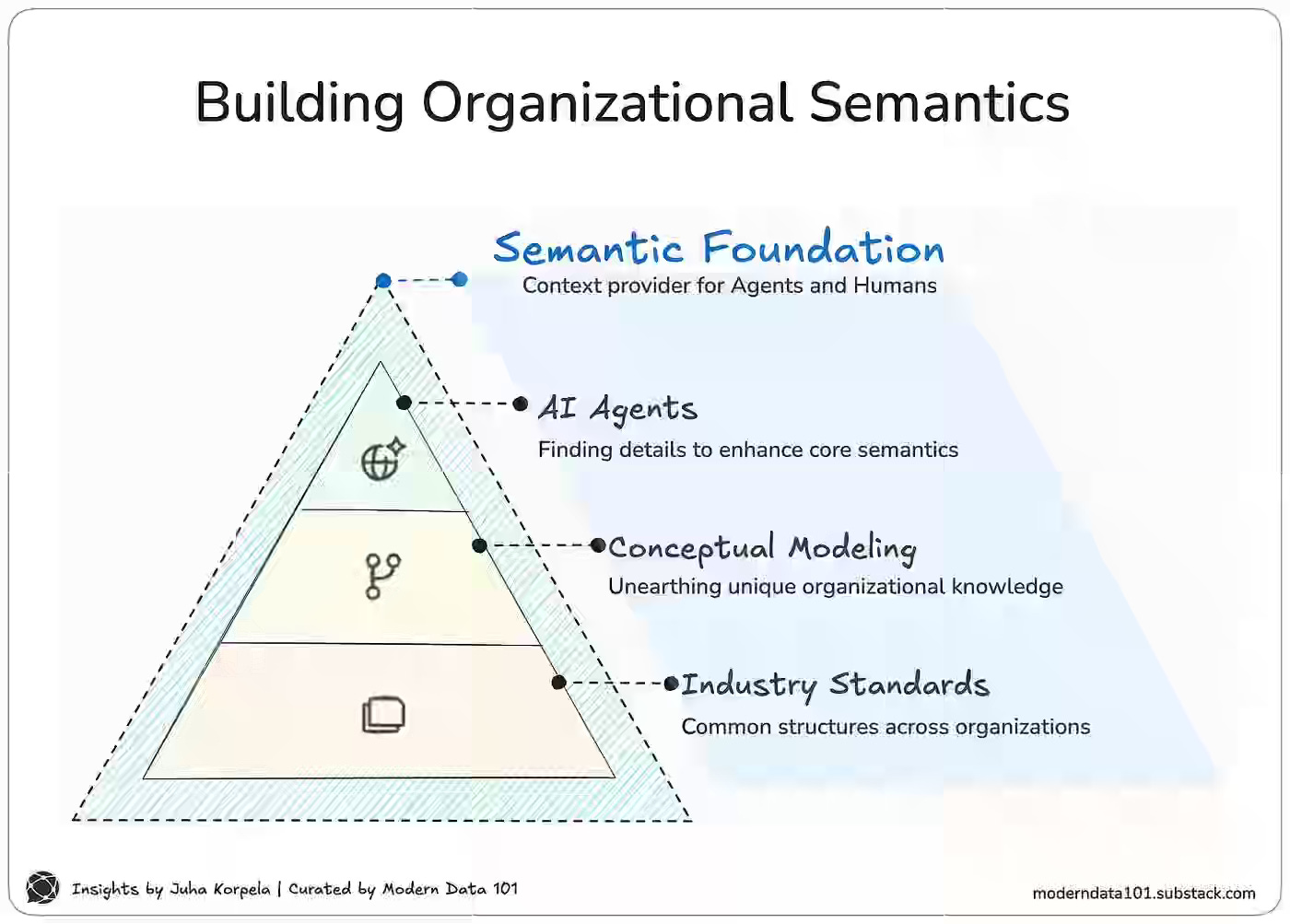

Modern Data 101: Modeling Semantics: How Data Models and Ontologies Connect to Build Your Semantic Foundations

AI-driven systems struggle without explicit semantic context to ground reasoning and reduce hallucinations. The article argues that data modeling and ontologies both capture entities and relationships and should serve as core methods for discovering and structuring organizational knowledge. By combining industry standards, conceptual modeling, and AI-assisted enrichment, teams can build a unified semantic foundation that improves both human understanding and AI accuracy.

All rights reserved, Dewpeche Private Limited. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employers’ opinions.
