The Ascending Arc of AI Agents

The Quest for Artificial General Intelligence

Artificial Intelligence (AI) is at a turning point. For decades, conversations about Artificial General Intelligence (AGI) have been met with skepticism. Yet, recent breakthroughs in model architectures, memory management, and continual learning suggest that our machines are becoming ever more capable. This article traces a timeline of key innovations, illustrating how we have moved from simple language model reasoning to interactive, context-rich, and self-improving AI agents.

Chain-of-Thought

The story begins with chain-of-thought reasoning: prompting a large language model (LLM) to write out intermediate steps before giving its answer. Models like GPT-3 showcased remarkable language capabilities, yet they could not consult external sources on the fly. They built each chain of reasoning solely from knowledge frozen in their weights and produced answers that could sound convincing but were prone to factual hallucinations. While these LLMs represented a quantum leap from earlier “bag-of-words” and n-gram models, it quickly became clear that reasoning in isolation could compound errors: a wrong step early in the chain propagates to the final answer, which is still delivered with unwarranted confidence.

Problem: The community realized that halting the cascade of hallucinations required more than just bigger models; it demanded a way for AI to test and ground its internal reasoning against external sources.

Reasoning + Acting

The first major pivot toward solving these issues came with the ReAct framework, which stands for Reason + Act. Instead of keeping reasoning locked away, ReAct interleaved reasoning steps with explicit actions, such as querying external APIs or searching a knowledge base. This meant the model could plan a series of steps (“I need to search for X, then interpret Y”), act on that plan, and incorporate any retrieved data back into its subsequent reasoning.

• Grounding Knowledge: By stepping into the “external world,” ReAct mitigated hallucinations. If the model’s initial assumption was off, the feedback loop allowed it to detect inconsistencies and correct course.

• Transparent Thought Process: This approach made AI more transparent: each action was paired with an explicit, human-readable reasoning trace, offering new opportunities for human oversight and fine-grained control.

Outcome: ReAct established a cornerstone principle: reasoning should not be an isolated monologue but a collaborative dialogue with the world.
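To make the Thought → Action → Observation loop concrete, here is a minimal Python sketch of a ReAct-style controller. The “model” is a scripted stand-in for an LLM, and the toy knowledge base, the `search` tool, and the `tool[argument]` action syntax (loosely modeled on the Search[...] and Finish[...] actions in the ReAct paper) are assumptions for illustration, not the paper’s code.

```python
import re
from typing import Callable, Dict

# Hypothetical toy knowledge base standing in for an external search API.
KNOWLEDGE_BASE: Dict[str, str] = {
    "voyager": "Voyager is an LLM-powered agent that learns skills in Minecraft.",
    "memgpt": "MemGPT pages information between a context window and external storage.",
}

def search(query: str) -> str:
    """Toy tool: look up a query in the knowledge base."""
    return KNOWLEDGE_BASE.get(query.lower(), f"No results for '{query}'.")

TOOLS: Dict[str, Callable[[str], str]] = {"search": search}
ACTION_RE = re.compile(r"^(\w+)\[(.*)\]$")  # e.g. search[MemGPT], finish[answer]

def react_loop(model: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Alternate model steps (Thought + Action) with tool Observations."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        # The model reads the full transcript, including earlier observations,
        # and emits one step whose last line is "Action: tool[argument]".
        step = model(transcript)
        transcript += step + "\n"
        last_line = step.strip().splitlines()[-1]
        match = ACTION_RE.match(last_line.removeprefix("Action: ").strip())
        if match is None:
            transcript += "Observation: could not parse action.\n"
            continue
        tool, arg = match.groups()
        if tool == "finish":
            return arg
        tool_fn = TOOLS.get(tool)
        observation = tool_fn(arg) if tool_fn else f"Unknown tool '{tool}'."
        # Appending the observation is what grounds the next reasoning step.
        transcript += f"Observation: {observation}\n"
    return "no answer within step budget"

def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM: search once, then answer from the result."""
    if "Observation:" not in transcript:
        return "Thought: I need facts about MemGPT.\nAction: search[MemGPT]"
    last_obs = transcript.strip().splitlines()[-1].removeprefix("Observation: ")
    return f"Thought: The observation answers the question.\nAction: finish[{last_obs}]"

print(react_loop(scripted_model, "What does MemGPT do?"))
```

The step budget matters: without it, a model that never emits `finish[...]` would loop forever, so a real controller needs some stopping rule like this one.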

Open-Ended Skill Acquisition

Once models began interacting with the world, the next challenge was continuous adaptation. Traditional AI training, where a model is taught once from a static dataset, proved insufficient for dynamic, evolving tasks. Enter VOYAGER, an LLM-powered agent that keeps exploring and acquiring new skills in Minecraft's open-ended world.

• Automatic Curriculum: Instead of a single, rigid training objective, VOYAGER gradually introduced challenges scaled to its evolving competence, reminiscent of how students progress from easier to more difficult homework.

• Skill Library: Newly mastered behaviors were stored as reusable, executable code, so knowledge accumulated: each learned skill became a building block for more complex tasks.

• Iterative Prompting: Failures weren’t the end but a signal to refine. Whenever the agent faltered, it fed environment feedback, execution errors, and a self-verification check back into its prompt to revise the code before trying again.

Outcome: VOYAGER demonstrated that lifelong learning was not just a theoretical aspiration but a practical framework: by structuring tasks, storing skills, and iterating, an AI agent could continually enrich its capabilities without restarting from scratch.
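VOYAGER’s skill library indexes each verified program by an embedding of its description and retrieves relevant skills when a new task arrives. The sketch below is a simplified, hypothetical Python version of that idea: a bag-of-words cosine similarity stands in for learned embeddings, and the skill names and code strings are invented placeholders.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List

def bag_of_words(text: str) -> Counter:
    """Stand-in for a text embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

@dataclass
class Skill:
    name: str
    description: str
    code: str  # program text the agent can re-run later (hypothetical placeholder here)

@dataclass
class SkillLibrary:
    skills: Dict[str, Skill] = field(default_factory=dict)

    def add(self, skill: Skill) -> None:
        """Store a skill; only skills that passed verification should be added.
        Re-adding a name overwrites it, so a refined version replaces the old one."""
        self.skills[skill.name] = skill

    def retrieve(self, task: str, k: int = 2) -> List[Skill]:
        """Return the k skills whose descriptions best match the task."""
        query = bag_of_words(task)
        ranked = sorted(
            self.skills.values(),
            key=lambda s: cosine(query, bag_of_words(s.description)),
            reverse=True,
        )
        return ranked[:k]

library = SkillLibrary()
library.add(Skill("mine_wood_log", "mine a wood log from a tree", "collect('log')"))
library.add(Skill("craft_planks", "craft wooden planks from a wood log", "craft('planks')"))
library.add(Skill("smelt_iron", "smelt iron ore in a furnace", "smelt('iron_ore')"))
print([s.name for s in library.retrieve("craft a wooden pickaxe from planks")])
# ['craft_planks', 'mine_wood_log']
```

Retrieved skills would then be placed in the prompt so the model can compose them into a new program, which is how earlier skills become building blocks.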

Managing Infinite Memory

As agent-based methods matured, a new bottleneck became evident: context windows. Even the most advanced LLMs could only handle a limited amount of text simultaneously—problematic for tasks involving long dialogues, extended documents, or multi-session problem-solving. That’s where MemGPT emerged, borrowing concepts from operating systems to implement a paging-like mechanism for AI memory.

• Memory Hierarchy: MemGPT distinguishes between main context (the context window, analogous to RAM) and external context (persistent storage, analogous to disk). Just as an OS pages data between RAM and disk, MemGPT moves relevant information into the context window and evicts what is no longer needed.

• Infinite Context: By deciding which memory “pages” to keep in the context window and which to store or retrieve, the system can draw on a repository far larger than any single context window, staying coherent across long, multi-session conversations.

• Complementary to Lifelong Learning: MemGPT’s architecture pairs naturally with VOYAGER-like skills, allowing an agent to recall specialized knowledge when needed.

Outcome: The dream of an “infinite context” agent started to look tangible, enabling continuous dialogue and knowledge accumulation without forgetting essential details.
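The paging idea can be sketched in a few lines of Python. This is a simplified illustration, not MemGPT’s implementation: the class and method names are hypothetical, token counts are approximated by word counts, eviction is oldest-first, and recall is a plain keyword match. In MemGPT itself, the LLM decides when to move data by issuing function calls, and evicted messages can be summarized rather than kept verbatim.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List

def count_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (real systems use a tokenizer)."""
    return len(text.split())

@dataclass
class PagedMemory:
    budget: int  # max "tokens" allowed in main context (the RAM analogue)
    main_context: Deque[str] = field(default_factory=deque)
    archive: List[str] = field(default_factory=list)  # external storage (the disk analogue)

    def used(self) -> int:
        return sum(count_tokens(m) for m in self.main_context)

    def append(self, message: str) -> None:
        """Add a message, paging the oldest ones out to the archive while over budget."""
        self.main_context.append(message)
        # Always keep at least the newest message in context.
        while self.used() > self.budget and len(self.main_context) > 1:
            self.archive.append(self.main_context.popleft())

    def recall(self, keyword: str, limit: int = 3) -> List[str]:
        """Search the archive (newest first); results would be placed back in the prompt."""
        hits = [m for m in reversed(self.archive) if keyword.lower() in m.lower()]
        return hits[:limit]

memory = PagedMemory(budget=12)
memory.append("user: my cat is named Miso")
memory.append("user: I work as a nurse")
memory.append("user: what should I cook tonight")  # pushes the oldest message out
print(memory.archive)         # ['user: my cat is named Miso']
print(memory.recall("miso"))  # ['user: my cat is named Miso']
```

The detail about the cat has left the context window but is not lost: a later question about pets can page it back in.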

Real-World Benchmarks

With models now capable of reasoning and acting (ReAct), learning over time (VOYAGER), and managing vast or ongoing context (MemGPT), the next step was to see how they performed on real-world tasks. Enter SWE-bench, a benchmark drawn from real GitHub issues and the pull requests that resolved them in popular Python repositories, which tests AI’s ability to navigate and modify complex codebases:

• Multi-File Dependencies: Resolving a SWE-bench issue often requires coordinated changes across multiple functions, classes, and files, demanding a holistic understanding of the software’s architecture.

• Context-Intensive Solutions: Relevant code is scattered across large repositories, so without something akin to MemGPT’s extended context, LLMs tend to produce patchwork fixes that miss deeper code dependencies.

• Actionable Feedback: Because every candidate patch is judged by running the repository’s tests, SWE-bench pinpoints where AI agents stumble, whether through missed edge cases, incomplete refactoring, or oversimplified patches.

Outcome: These real-world exercises revealed critical shortcomings and helped researchers pinpoint how to refine their approaches. They also suggested that agents combining ReAct-style tool use, VOYAGER-style iterative feedback, and MemGPT-style context management could substantially improve success rates on complex coding tasks.
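SWE-bench’s feedback comes from execution: a candidate patch is applied to the repository and its tests are run. The Python sketch below shows the resolution criterion under simplified assumptions. Each task instance lists tests that must go from failing to passing (the dataset’s FAIL_TO_PASS field) and tests that must keep passing (PASS_TO_PASS); the function names and test names here are hypothetical, and the real harness also handles environment setup and patch application.

```python
from typing import Dict, Iterable, List

def is_resolved(results: Dict[str, bool],
                fail_to_pass: Iterable[str],
                pass_to_pass: Iterable[str]) -> bool:
    """Resolved only if every target test now passes and no previously passing test broke.
    A test missing from `results` (e.g. it crashed or never ran) counts as a failure."""
    fixed = all(results.get(test, False) for test in fail_to_pass)
    no_regressions = all(results.get(test, False) for test in pass_to_pass)
    return fixed and no_regressions

def resolution_rate(outcomes: List[bool]) -> float:
    """Percentage of task instances resolved."""
    return 100.0 * sum(outcomes) / len(outcomes) if outcomes else 0.0

# Hypothetical test results after applying a candidate patch.
results = {"test_empty_input": True, "test_basic_parse": True, "test_roundtrip": False}
print(is_resolved(results, ["test_empty_input"], ["test_basic_parse"]))  # True
print(is_resolved(results, ["test_empty_input"], ["test_roundtrip"]))    # False: regression
print(resolution_rate([True, False, False, True]))                       # 50.0
```

Requiring both conditions is what exposes “patchwork” fixes: a patch that makes the target test pass but breaks a neighboring one still counts as a failure.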

Model Distillation as a “Textbook” Cycle

Even as these agent frameworks advanced, the broader question of how knowledge moves from one AI generation to the next persisted. Model distillation offered an elegant solution—mirroring how textbooks distill expert wisdom for students:

• Teacher → Student: Large, comprehensive models act as “teachers,” encoding a wealth of information. Distilling that knowledge creates smaller, specialized “students” who can still perform at a high level.

• Iterative Refinement: Textbooks undergo new editions, and so can distilled models. Each iteration can integrate fresh insights from ReAct-like grounded reasoning, VOYAGER’s skill libraries, or MemGPT’s memory management.

• Beyond Simple Compression: Effective distillation isn’t a mere downscaling exercise. It involves contextualizing knowledge, providing structured examples, and sometimes adding new insights from real-world tasks (like SWE-bench).

Outcome: This cyclical teacher-student paradigm ensures that breakthroughs in one generation are systematically transferred and evolved in the next, accelerating the collective progress of AI systems.
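For a concrete instance of teacher → student transfer, the sketch below implements the classic soft-target loss of Hinton et al. (2015) in plain Python: the student is trained to match the teacher’s temperature-softened output distribution while still fitting the true label. This is one common form of distillation, chosen here as an assumption; LLM pipelines often instead fine-tune the student on text generated by the teacher. The temperature and `alpha` defaults are illustrative.

```python
import math
from typing import List, Sequence

def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher T gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; terms where p_i = 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * soft-target loss (match the teacher) + (1 - alpha) * hard-label cross-entropy."""
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Multiply by T^2 so the soft-target gradients keep a comparable scale as T changes.
    soft = kl_divergence(teacher_soft, student_soft) * temperature ** 2
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard

teacher = [1.0, 2.0, 4.0]
print(distillation_loss([1.0, 2.0, 3.5], teacher, label=2))  # student close to teacher
print(distillation_loss([4.0, 2.0, 1.0], teacher, label=2))  # student disagrees: larger loss
```

The softened teacher distribution carries more than the correct answer: it also encodes which wrong answers the teacher considers plausible, which is the “contextualized knowledge” a plain label cannot pass on.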

Convergence on AGI

The journey from pure LLM reasoning to grounded, interactive, lifelong-learning, context-adept agents signals a broader paradigm shift in AI. By addressing—one after another—the challenges of hallucination, forgetfulness, rigid task definitions, and real-world complexity, these innovations collectively pave the way for systems that behave less like static chatbots and more like adaptive co-pilots in problem-solving.

1. Reasoning + Acting (ReAct): No more sealed “thought bubbles”—agents can test their logic in the real world.

2. Lifelong Learning (VOYAGER): AI grows over time, amassing and reusing a library of skills with minimal human intervention.

3. Infinite Memory (MemGPT): Context no longer needs to be a bottleneck, enabling extended interactions and robust recall.

4. Real-World Challenges (SWE-bench): Benchmarks push agents to handle professional-grade tasks, revealing their promise and limitations.

5. Model Distillation: Knowledge passes from one generation to the next, forging an ever more capable AI lineage.

Challenges in Agent-of-Agents Systems

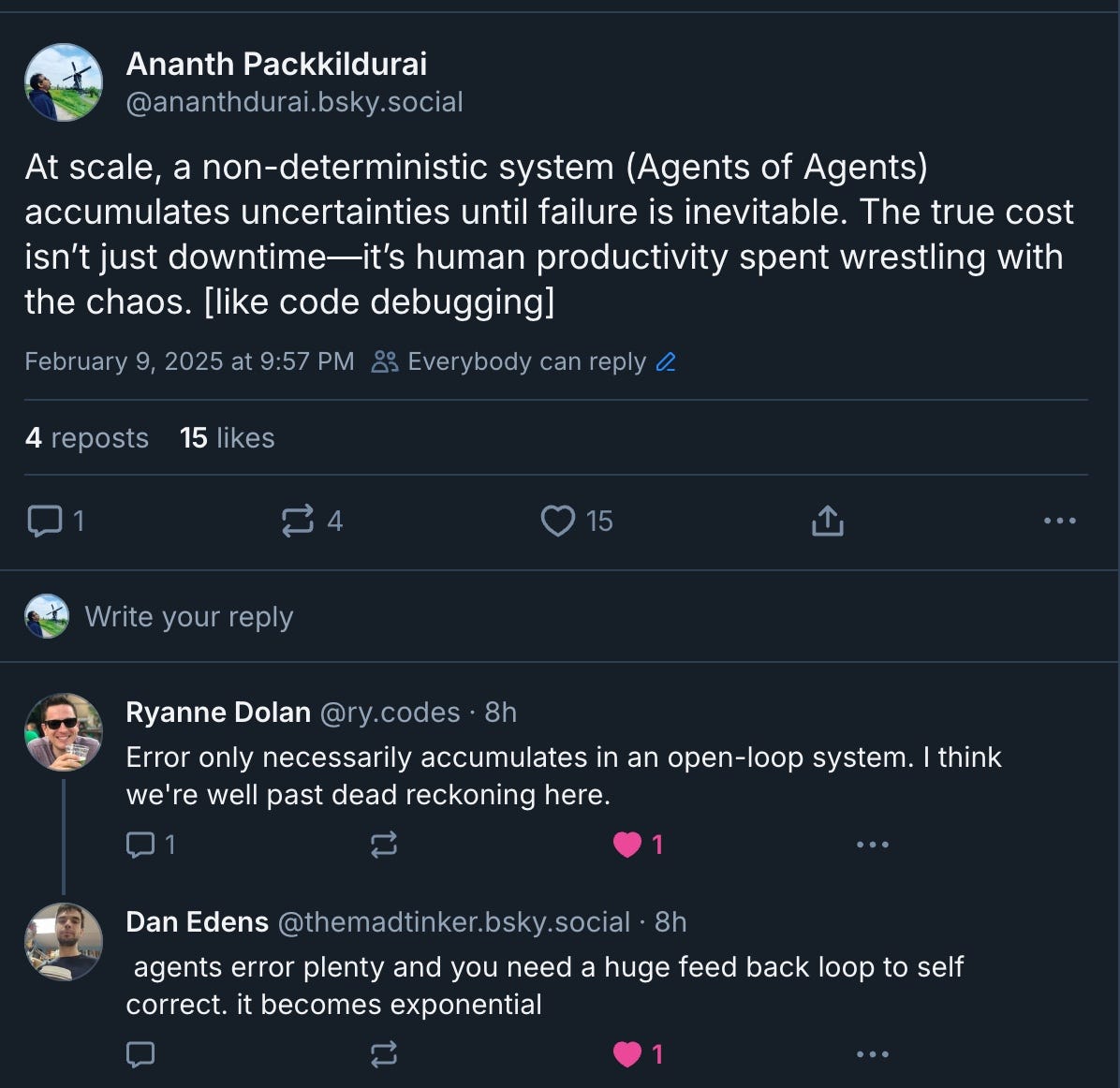

Multi-agent systems present significant challenges, including an inherent tendency for complexity to accumulate over time, which can ultimately lead to system failures. The costs go beyond downtime to include the substantial human effort needed for debugging and maintenance.

While open-loop designs are especially prone to unbounded error accumulation, closed-loop approaches demand robust feedback mechanisms that add their own layers of complexity. As agents multiply, these feedback loops interact with one another, and the effort required to scale and manage agent-based architectures grows rapidly. Continued adoption of agents will depend on making multi-agent systems more deterministic, or on businesses learning to operate with non-deterministic ones.
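A back-of-the-envelope model shows why open-loop chains degrade and what closing the loop costs. The sketch assumes each agent step succeeds independently with probability p, and that a closed-loop verifier catches every failure and allows a fixed number of retries; both are simplifications chosen for illustration, and the function names are hypothetical. Under these assumptions, open-loop success decays exponentially with chain length (p^n), while retries restore reliability at the price of extra calls.

```python
def open_loop_success(p: float, n: int) -> float:
    """Chance an n-step chain finishes error-free when each step succeeds
    independently with probability p and nothing catches mistakes."""
    return p ** n

def closed_loop_success(p: float, n: int, retries: int) -> float:
    """Same chain, but a (perfect) verifier detects each failed step and
    allows up to `retries` independent attempts per step."""
    per_step = 1 - (1 - p) ** retries
    return per_step ** n

def expected_attempts(p: float, retries: int) -> float:
    """Expected attempts per step under that retry policy: attempt i+1 happens
    only if the first i attempts failed, with probability (1 - p) ** i."""
    return sum((1 - p) ** i for i in range(retries))

print(f"{open_loop_success(0.95, 10):.3f}")       # 0.599
print(f"{closed_loop_success(0.95, 10, 3):.3f}")  # 0.999
print(f"{expected_attempts(0.95, 3):.4f}")        # 1.0525
```

With p = 0.95 and ten steps, roughly 40% of open-loop runs contain at least one error. Three retries push success to about 99.9% while spending about 5% more calls per step, and that is before counting the verifier’s own cost and the fact that a real verifier can itself be wrong.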

The Ascent Continues

We stand at the threshold of a new AI epoch. Learning from the limitations of static chain-of-thought models, researchers have developed ReAct to ground reasoning in external reality, VOYAGER to ensure continual growth, MemGPT to enable near-infinite recall, and SWE-bench to validate performance on real, messy tasks. Model distillation wraps these pillars together, ensuring that each generation of AI can stand on the shoulders of its predecessors.

While full-fledged Artificial General Intelligence remains an ambitious target, the cumulative impact of these advances cannot be overstated. They illuminate a path where AI agents evolve from mere tools into collaborative problem-solvers, capable of navigating the complexities of our world and, in many ways, exceeding the imagination of their original creators.

References

ReAct: Synergizing Reasoning and Acting in Language Models [https://arxiv.org/abs/2210.03629]

Voyager: An Open-Ended Embodied Agent with Large Language Models [https://arxiv.org/abs/2305.16291]

SWE-bench: Can Language Models Resolve Real-World GitHub Issues? [https://arxiv.org/abs/2310.06770]

MemGPT: Towards LLMs as Operating Systems [https://arxiv.org/abs/2310.08560]

Chain-of-Verification Reduces Hallucination in Large Language Models [https://arxiv.org/abs/2309.11495]

The agent economy [https://www.felicis.com/insight/the-agent-economy]