Data Engineering Weekly #164

The Weekly Data Engineering Newsletter

a16z: 16 Changes to the Way Enterprises Are Building and Buying Generative AI

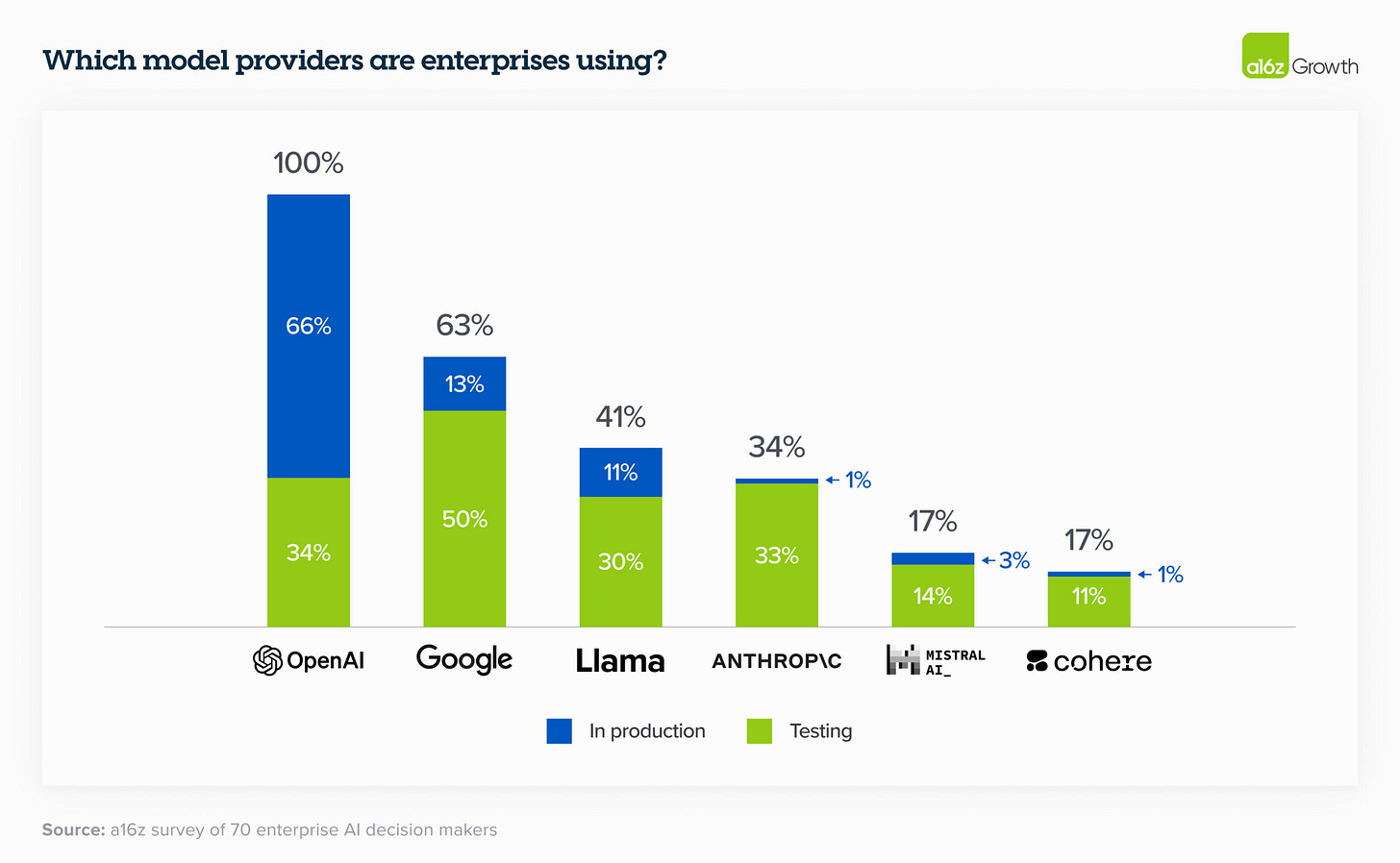

This report offers many interesting insights into enterprise adoption of Gen AI. Companies are more open to adopting Gen AI for internal use cases but have reservations about rolling it out to their clients. Gen AI spending is moving out of experimental budgets and into regular software budgets. OpenAI has more production deployments, but Google is catching up.

https://a16z.com/generative-ai-enterprise-2024/

Kai Waehner: The Data Streaming Landscape 2024

This is a comprehensive overview of the data streaming landscape in 2024. As we predicted in our key trends of 2023, Apache Flink is emerging as the clear winner among stream processing frameworks, and Confluent now offers Flink as a service. The author goes beyond comparing tools and covers the various vendor offerings for stream processing and Kafka protocol-compatible systems.

https://kai-waehner.medium.com/the-data-streaming-landscape-2024-6e078b1959b5

Uber: Model Excellence Scores - A Framework for Enhancing the Quality of Machine Learning Systems at Scale

Uber writes about Model Excellence Scores (MES), a framework to improve the quality of machine learning (ML) systems at scale. The MES framework evaluates model quality across the lifecycle stages (prototyping, training, deployment, and prediction) using Service Level Agreement (SLA) principles. MES measures and enforces quality through indicators, objectives, and agreements, which improves visibility, fosters a quality-focused culture, and substantially improves prediction performance and operational efficiency.

https://www.uber.com/en-SG/blog/enhancing-the-quality-of-machine-learning-systems-at-scale/
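To make the indicator / objective idea more concrete, here is a minimal Python sketch. It is not Uber's implementation: the `Objective` class, the indicator names, the thresholds, and the "fraction of objectives met" score are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """A target for one quality indicator (hypothetical structure)."""
    indicator: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # Compare the observed indicator value against the target.
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def excellence_score(objectives, observed):
    """Fraction of objectives met; an assumed scoring rule, not Uber's formula.

    Indicators missing from `observed` count as not met.
    """
    if not objectives:
        raise ValueError("at least one objective is required")
    met = sum(
        1
        for o in objectives
        if o.indicator in observed and o.is_met(observed[o.indicator])
    )
    return met / len(objectives)


# Hypothetical indicators for one model across lifecycle stages.
objectives = [
    Objective("offline_auc", 0.80),
    Objective("p99_latency_ms", 50.0, higher_is_better=False),
    Objective("feature_null_rate", 0.01, higher_is_better=False),
]
observed = {"offline_auc": 0.83, "p99_latency_ms": 72.0, "feature_null_rate": 0.004}
score = excellence_score(objectives, observed)  # two of the three objectives are met
```

An agreement, in this framing, would be a commitment by the model owner to keep such a score above an agreed level; how that is enforced is left out of the sketch.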

Sponsored: Embedded Analytics and AI Chatbot on one semantic layer

AI Chatbot for Embedded Analytics powered by a Semantic Layer

Spyne, a SaaS company, uses Cube's semantic layer to enhance customer-embedded analytics by incorporating an AI chatbot. Dive into Spyne's experience with:

- Their search for query acceleration with pre-aggregations and caching

- Developing new functionality with OpenAI

- Optimizing query cost with their data warehouse

https://cube.dev/case-studies/embedded-analytics-and-ai-chatbot-on-one-semantic-layer

Suresh Hasuni: Cost Optimization Strategies for Scalable Data Lakehouse

Cost becomes a major concern as the adoption of data lakes increases. The author shares excellent tips on optimizing Apache Hudi lakehouse infrastructure by adopting indexes, partition pruning, handling incomplete versions, etc.

https://blogs.halodoc.io/data-lake-cost-optimisation-strategies/amp/

Salesforce: Moirai - A Time Series Foundation Model for Universal Forecasting

Salesforce AI Research introduced Moirai, a foundation model for universal time series forecasting trained on a dataset of 27 billion observations spanning nine domains. It addresses challenges across multiple domains and frequencies with zero-shot forecasting capabilities. Moirai uses this large, diverse dataset along with techniques like any-variate attention and multiple patch-size projection layers to model complex, variable patterns. This approach allows Moirai to deliver strong forecasting performance without needing domain-specific models, in both familiar and novel scenarios.

https://blog.salesforceairesearch.com/moirai/

Meta: Logarithm - A logging engine for AI training workflows and services

Logarithm indexes 100+ GB/s of logs in real time and handles thousands of queries a second!!! The logging engine, built to debug AI workflow logs, is an excellent system design study if you're interested in that area. At a high level, Logarithm works as follows:

- Application processes emit logs using logging APIs. The APIs support emitting unstructured log lines and typed metadata key-value pairs (per line).

- A host-side agent discovers the format of lines and parses lines for common fields, such as timestamp, severity, process ID, and call site.

- The resulting object is buffered and written to a distributed queue (for that log stream), providing durability guarantees with days of object lifetime.

- Ingestion clusters read objects from queues and support additional parsing based on user-defined regex extraction rules. The extracted key-value pairs are written to the line’s metadata.

- Query clusters support interactive and bulk queries on one or more log streams with predicate filters on log text and metadata.
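The flow above can be sketched in a few lines of Python. This is a toy, single-process illustration, not Meta's code: the log line format, the `LogObject` class, and the `host_agent_parse`, `ingest`, and `query` functions are all hypothetical, and the distributed queue between the agent and the ingestion step is omitted.

```python
import re
from dataclasses import dataclass, field

# Assumed line format: "<timestamp> <SEVERITY> <pid> <file:line> <message>"
LINE_RE = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<severity>[A-Z]+)\s+(?P<pid>\d+)\s+"
    r"(?P<call_site>\S+:\d+)\s+(?P<text>.*)$"
)


@dataclass
class LogObject:
    text: str
    metadata: dict = field(default_factory=dict)


def host_agent_parse(line: str) -> LogObject:
    """Host-side step: pull common fields out of a raw line."""
    match = LINE_RE.match(line)
    if match is None:
        # Unknown format: keep the raw line with no parsed metadata.
        return LogObject(text=line)
    fields = match.groupdict()
    text = fields.pop("text")
    return LogObject(text=text, metadata=fields)


def ingest(obj: LogObject, rules) -> LogObject:
    """Ingestion step: apply user-defined regex rules with named groups."""
    for rule in rules:
        match = rule.search(obj.text)
        if match:
            obj.metadata.update(match.groupdict())
    return obj


def query(objects, text_contains: str, **metadata_equals):
    """Query step: predicate filters on log text and metadata."""
    return [
        o
        for o in objects
        if text_contains in o.text
        and all(o.metadata.get(k) == v for k, v in metadata_equals.items())
    ]


lines = [
    "2024-03-20T10:00:00Z ERROR 4242 trainer.py:88 step=120 loss diverged",
    "2024-03-20T10:00:01Z INFO 4242 trainer.py:90 step=121 checkpoint saved",
]
rules = [re.compile(r"step=(?P<step>\d+)")]  # hypothetical extraction rule
objects = [ingest(host_agent_parse(line), rules) for line in lines]
hits = query(objects, "loss", severity="ERROR")
```

Here `hits` holds only the first line, with `step`, `severity`, `pid`, and `call_site` available as metadata for further filtering.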

Freshworks: Modernizing analytics data ingestion pipeline from legacy engine to distributed processing engine

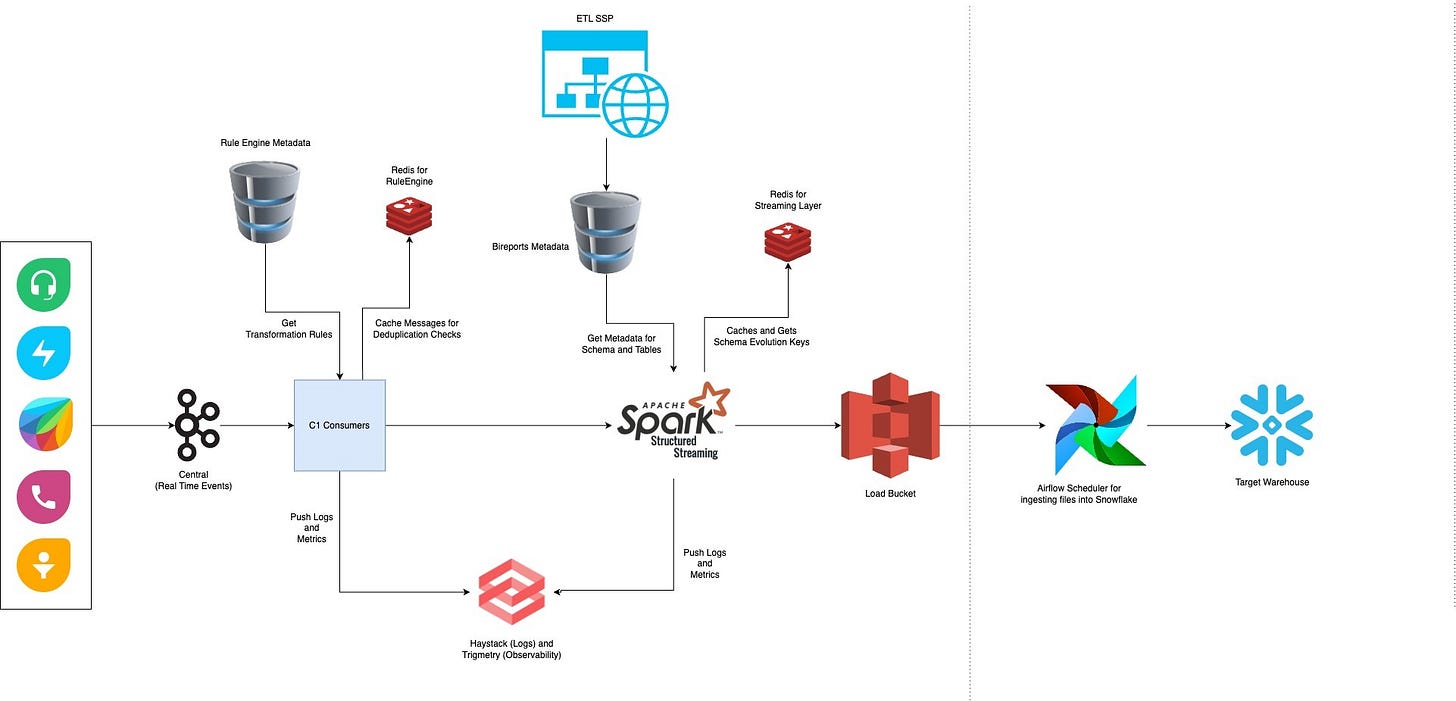

The article discusses Freshworks' journey in modernizing its analytics data platform to handle increasing volumes of data efficiently. The legacy system used a mix of Python consumers, Ruby on Rails, and Apache Airflow for data ingestion and processing. Freshworks replaced it with a distributed, auto-scaling system built on Apache Spark. The new system improved scalability, maintainability, cost-effectiveness, and performance, delivering significant savings and operational efficiencies while handling millions of messages per minute for analytics purposes.

Mercado Libre Tech: Data Mesh @ MELI - Building Highways for Thousands of Data Producers

OK, Data Mesh is still alive!! Data Mesh is certainly a hotly debated topic in the data industry. Mercado Libre writes about its adoption of Data Mesh, driven by the company's rapid growth and diverse ecosystem. The approach decentralizes data production across domains, relying on cultural shifts and technological advancements to enable independent data management. Their implementation involved creating Data Mesh Environments (DMEs) for better infrastructure, ensuring homogeneous technology use, and establishing a single user access point, significantly enhancing scalability and governance while supporting continuous innovation.

Lyft: Lyft’s Reinforcement Learning Platform

Lyft developed a reinforcement learning (RL) platform, particularly focusing on Contextual Bandits, to address decision-making challenges unsuited for supervised learning or optimization models. Lyft’s RL approach involves testing actions, observing feedback through rewards, and updating models for improved decision-making, enabling continuous learning and adaptation to changing environments. The platform leverages its existing ML ecosystem, extends it for RL models, and incorporates lessons from deploying RL in real-world applications, emphasizing the power and challenges of RL in dynamic decision-making and optimization.

https://eng.lyft.com/lyfts-reinforcement-learning-platform-670f77ff46ec
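The test, observe, update loop behind contextual bandits can be illustrated with a small epsilon-greedy sketch in Python. This is not Lyft's platform or algorithm: the `EpsilonGreedyBandit` class, the discrete string contexts, and the running-mean reward estimate are assumptions chosen to keep the example short.

```python
import random
from collections import defaultdict


class EpsilonGreedyBandit:
    """Tabular epsilon-greedy contextual bandit (illustrative only)."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (context, action) -> times tried
        self.values = defaultdict(float)  # (context, action) -> mean reward

    def choose(self, context):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.values[(context, a)])

    def update(self, context, action, reward):
        # Incremental running mean of the observed rewards.
        key = (context, action)
        self.counts[key] += 1
        self.values[key] += (reward - self.values[key]) / self.counts[key]


# Hypothetical usage: pick a message variant per rider segment.
bandit = EpsilonGreedyBandit(["variant_a", "variant_b"], epsilon=0.1)
action = bandit.choose("new_rider")
bandit.update("new_rider", action, reward=1.0)  # e.g. the rider engaged
```

A production system would typically replace the lookup table with a model over context features, but the loop of choosing an action, observing a reward, and updating the estimate stays the same.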

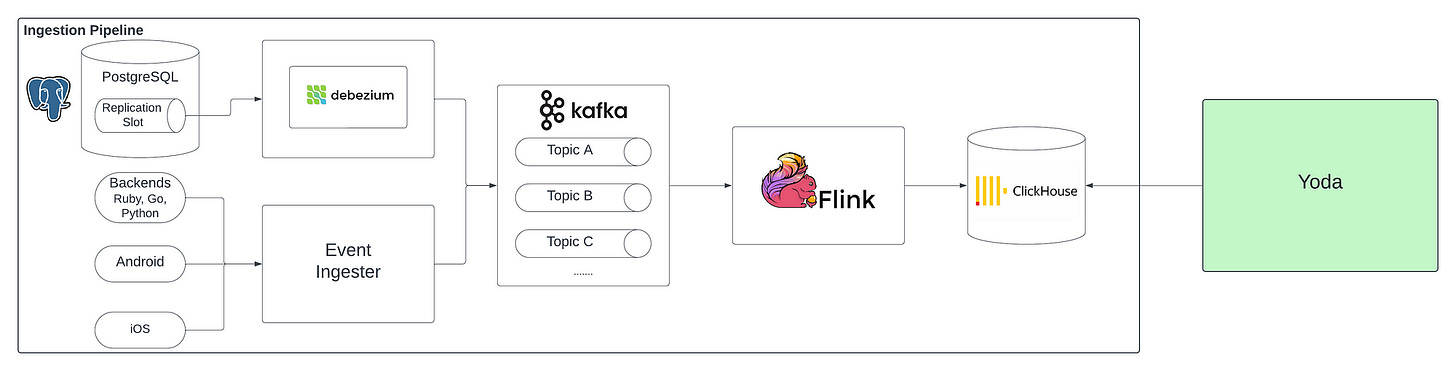

Instacart: Real-time Fraud Detection with Yoda and ClickHouse

Instacart introduced its Fraud Platform, Yoda, utilizing ClickHouse as its real-time datastore to quickly detect and respond to various fraud types, from fake accounts to payment fraud. Yoda enables analysts to create rules that differentiate legitimate from fraudulent activities, taking actions such as blocking transactions or disabling accounts. ClickHouse's data storage and processing efficiency, coupled with a self-serve, flexible system for fraud detection, significantly enhances Instacart's ability to maintain a trustworthy environment and safeguard its financial health.

https://tech.instacart.com/real-time-fraud-detection-with-yoda-and-clickhouse-bd08e9dbe3f4

All rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent the opinions of my current, former, or future employers.