Intuit: How Intuit data analysts write SQL 2x faster with the internal GenAI tool

The productivity increase with GenAI is undeniable, and several startups are trying to solve the Text2SQL generation problem. Intuit wrote an exciting article about what it learned from rolling out the internal GenAI tool. My key highlight is that Excellent data documentation and “clean data” improve results. The blog further emphasizes its increased investment in Data Mesh and clean data.

Databricks: PySpark in 2023 - A Year in Review

Can we safely say PySpark killed Scala-based data pipelines? The blog is an excellent overview of all the improvements made to PySpark in 2023. I’m looking forward to playing around with Testing API and Arrow-optimized UDF since UDF is the only reason I write Scala nowadays.

https://www.databricks.com/blog/pyspark-2023-year-review

Uber: Scaling AI/ML Infrastructure at Uber

The advancement in AI/ML brings significant challenges for infrastructure to scale and support. The challenge multiplies when designing a singular AI/ML system amid rapid application and model advancements, from XGboost to deep learning recommendation and large language models. The blog narrates how Uber utilizes the existing infrastructure to support various AI/ML use cases.

https://www.uber.com/blog/scaling-ai-ml-infrastructure-at-uber/

Sponsored: Accelerate Your Data Apps to Sub-Second Query Performance with Cube Cloud

Learn strategies to overcome slow queries in this guide on optimizing your data reporting for speed and responsiveness, aligning perfectly with your users' expectations.

https://cube.dev/blog/sub-second-query-performance-for-data-apps

Netflix: Navigating the Netflix Data Deluge - The Imperative of Effective Data Management

Organizations continuously generate data on a massive scale. I often noticed that the derived data is always > 10 times larger than the warehouse's raw data. The Netflix blog emphasizes the importance of finding the zombie data and the system design around deleting unused data.

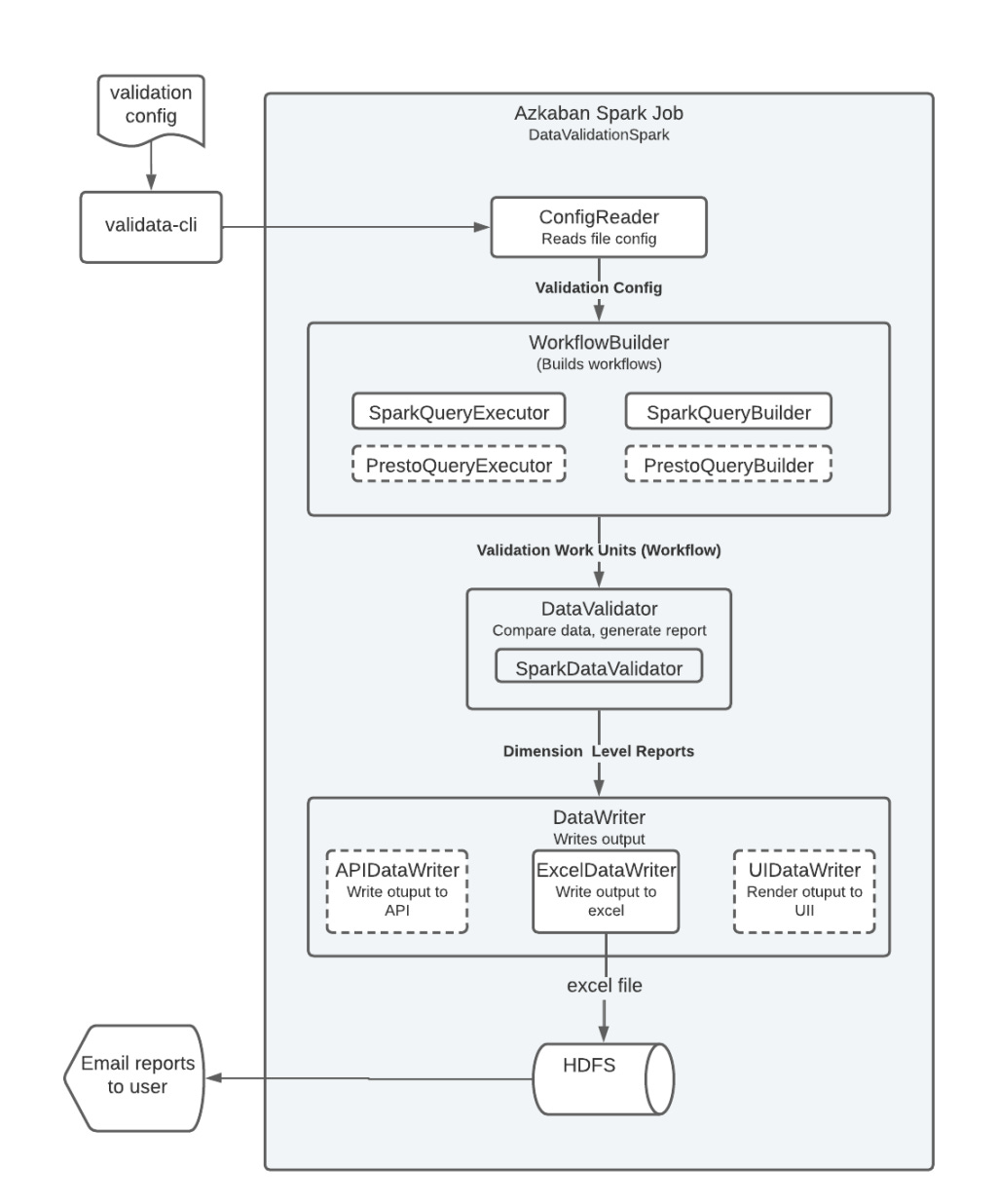

LinkedIn: Scalable Automated Config-Driven Data Validation with ValiData

One of the reasons why I advocate strongly and built solutions around data products, and contracts are that I firmly believe the data quality should be expressed from the data consumers' perspective. Data is only as good as the business value it provides, and the business value can only be seen from the consumer's perspective.

I am delighted that LinkedIn’s ValiData is focused on simplifying the data validation process through simple configuration files.

DoorDash: Setting Up Kafka Multi-Tenancy

DoorDash writes an insightful article about setting Multi-Tenancy in the Kafka cluster and the role of a consumer proxy in setting this up. The problem statement is certainly pretty interesting.

Traditionally, an isolated environment such as staging is used to validate new features. But setting up a different data traffic pipeline in a staging environment to mimic billions of real-time events is difficult and inefficient, while requiring ongoing maintenance to keep data up-to-date. To address this challenge, the team at DoorDash embraced testing in production via a multi-tenant architecture, which leverages a single microservice stack for all kinds of traffic including test traffic.

https://doordash.engineering/2024/03/27/setting-up-kafka-multi-tenancy/

AWS: Exploring real-time streaming for generative AI Applications

This article explores how real-time streaming can significantly enhance generative AI applications by providing immediate, contextually relevant data for better adaptability and performance across various tasks. Is Real-time data streaming essential for maintaining the accuracy and relevance of AI-driven applications? So far, I think Gen AI applications are a request/ response model. Please comment with your thoughts on the role of real-time streaming in the Gen AI application development lifecycle.

https://aws.amazon.com/blogs/big-data/exploring-real-time-streaming-for-generative-ai-applications/

Jet Brains: Polars vs. Pandas: What’s the Difference?

Python took over the data world, hands down, as the conversation around Polars vs. Pandas increased. But what is the difference between Polars and Pandas? Polars written in Rust offer 5-10X more performance than Pandas!! The trend is a simple example of the rise of Rust in building data infrastructure. The PyO3 native Python crate for Rust makes building Python binding much simpler, which increases the adoption of an architecture pattern using the mix of Rust and Python.

https://blog.jetbrains.com/dataspell/2023/08/polars-vs-pandas-what-s-the-difference/

Yelp: Phone Number Masking for Yelp Services Projects

An exciting case study from Yelp on designing privacy-preserving system design. Yelp writes about the phone number masking feature for its Services Marketplace, aiming to protect consumer privacy and build trust by allowing users and service professionals to communicate via phone calls and SMS without exchanging real phone numbers. The system design around phone number recycling and reuse is an exciting read.

https://engineeringblog.yelp.com/2024/03/phone-number-masking-for-yelp-services-projects.html

Harish Kumar M: Julian to Gregorian - Timestamp Anomalies in Trino-Spark

The blog is a gentle reminder for our readers about all the nightmares around the timestamp in various processing engines. The blog discusses inaccuracies caused by int96 Parquet datatype, particularly in data created by Apache Spark. The author recommends using the following Spark config change as a fix.

spark.sql.parquet.outputTimestampType=TIMESTAMP_MICROSAll rights reserved ProtoGrowth Inc, India. I have provided links for informational purposes and do not suggest endorsement. All views expressed in this newsletter are my own and do not represent current, former, or future employer” opinions.

Whats the criteria of selection?